FAQ

Coveo Machine Learning (Coveo ML) is a service that leverages usage analytics data to deliver relevant search results and proactive recommendations (see Coveo Machine Learning). Coveo ML offers several model types that use predictive models to improve content relevance and make recommendations.

This article answers common questions about Coveo ML.

General

How does Intelligent Term Detection (ITD) work?

A typical Natural Language Processing (NLP) application extracts terms based on linguistic rules and word frequency.

In addition to traditional NLP methods, Coveo Machine Learning (Coveo ML) leverages vocabulary previously employed by end users to search for content, therefore providing a more accurate picture of what’s contextually relevant to the current end user.

A typical basic query expression (q) (that is, what the end user types in a search box) contains an average of four keywords.

However, some use cases require much larger chunks of text to be used as input for queries, such as an entire support case description.

The default query processing algorithm isn’t designed to deal with that many keywords.

Therefore, Coveo ML provides an Intelligent Term Detection (ITD) algorithm to extract only the most relevant keywords from these large strings of text. ITD extracts these keywords from both the basic query expression (q) and the large query expression (lq), and then injects them into the lq.

To do so, ITD uses a set of usage analytics events (search and click) recorded for a search interface:

- It selects up to 2,500 queries that generated a positive outcome (that is, a query result was opened) and were performed at least five times. The selected queries are called top user queries.

  Note: If there are more than 2,500 queries that were made five or more times, then ITD selects the 2,500 most popular ones.

- It establishes a correlation between the top user queries and the keywords contained in the q and the lq (for example, a support case description).

- It finds the five most relevant terms in the q and the lq based on the average importance of each term (see TFIDF), and then replaces the lq expressions with a partial match of the top expressions identified by the model. These five terms are called the refined keywords.

  Leading practice: To review refined keywords in usage analytics reports, you must create a custom usage analytics dimension with the following configuration:

  - API name: refinedkeywords
  - Type: text
  - Related events: Search, Click, Custom event

- It overrides the original lq with the refined keywords before the query is executed against the index.
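The selection and scoring steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not Coveo’s implementation: the `(query, result_opened)` event tuples, the function names, the tokenizer, the rule that only searches with an opened result count toward the five-occurrence threshold, and the use of the top user queries as the IDF corpus are all hypothetical. The sketch returns plain terms and doesn’t reproduce the partial-match expression that ITD writes into the lq.

```python
import math
import re
from collections import Counter


def select_top_user_queries(search_events, min_count=5, limit=2500):
    """Return up to `limit` queries that led to an opened result at least
    `min_count` times, most popular first.

    `search_events` is a hypothetical list of (query_text, result_opened) tuples.
    """
    counts = Counter(
        query.strip().lower() for query, result_opened in search_events if result_opened
    )
    # most_common() sorts by count (descending); ties keep first-seen order.
    return [query for query, count in counts.most_common() if count >= min_count][:limit]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def refine_keywords(long_text, top_queries, k=5):
    """Return the k highest-scoring terms of `long_text` (for example, a case
    description) that also appear in the top user queries (TF-IDF scoring)."""
    query_docs = [set(tokenize(q)) for q in top_queries]
    vocabulary = set().union(*query_docs)
    n_docs = len(query_docs)
    term_freq = Counter(t for t in tokenize(long_text) if t in vocabulary)

    def idf(term):
        # Smoothed inverse document frequency over the top user queries.
        doc_freq = sum(1 for doc in query_docs if term in doc)
        return math.log((1 + n_docs) / (1 + doc_freq)) + 1

    scores = {term: tf * idf(term) for term, tf in term_freq.items()}
    # Highest score first; alphabetical order breaks ties deterministically.
    ranked = sorted(scores, key=lambda term: (-scores[term], term))
    return ranked[:k]


events = ([("reset password", True)] * 6 + [("vpn error", True)] * 5
          + [("printer", True)] * 4 + [("billing", False)] * 10)
top = select_top_user_queries(events)
print(top)  # ['reset password', 'vpn error']
print(refine_keywords("I cannot reset my password and the VPN shows an error after password reset", top))
# ['password', 'reset', 'error', 'vpn']
```

Restricting candidates to the top-user-query vocabulary is what lets the sketch favor terms end users actually search with, which mirrors the intent described above without claiming to match the production model.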


How do I enable ITD?

To enable ITD, you must first ensure that you created an ART model and that the model is active and available.

Once the model is created and active, you can associate the model with the query pipeline to which your search interface traffic is directed.

When associating your ART model with a query pipeline, you must select the Comply with Intelligent Term Detection (ITD) checkbox.

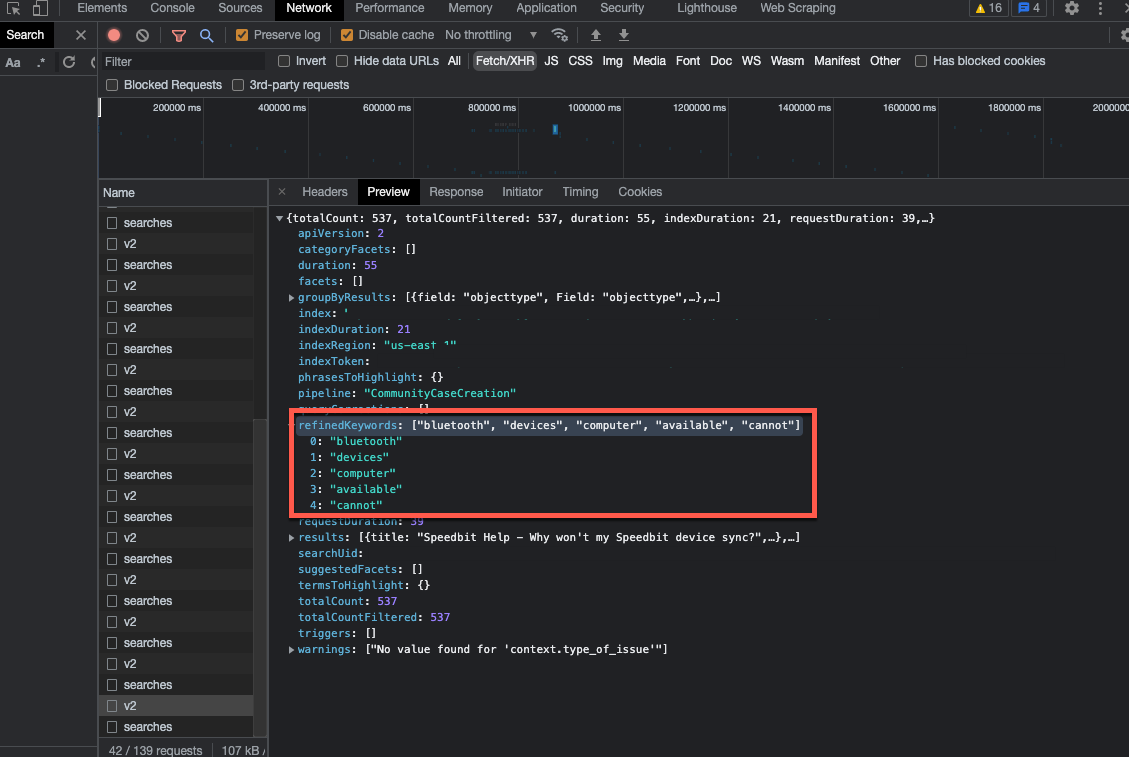

How can I verify that ITD is working properly?

Before you begin, make sure that you’ve associated the ART model with the query pipeline to which your search interface traffic is directed, and that the Comply with Intelligent Term Detection (ITD) option is enabled for this association. To verify whether ITD works as expected:

- Access the search interface you want to test (typically a case creation form).

- Open your web browser’s developer tools.

  Note: The examples in this article use the Google Chrome developer tools. For browser-specific information, see your browser’s developer tools documentation.

- Select the Network tab.

- Send a query for which the model is able to recommend items.

- Back in your browser developer tools, under the Name column, select the latest request to the Search API. The request path should contain /rest/search/v2.

- Select the Preview tab. You should now see the query response body.

- In the query response body, you should see an expandable refinedKeywords property. Expand it to see the keywords that ITD extracted from the lq. If the refinedKeywords property is empty, ITD didn’t extract keywords from the current lq.

Note: When an ART model with the ITD option enabled hasn’t gathered enough data to provide refined keywords, the …
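If you copy the response body from the Preview or Response tab, a short Python sketch can run the same check. It assumes, without confirmation from this article, that `refinedKeywords` is a top-level array of strings in the JSON response; the function names and the sample body are hypothetical.

```python
import json


def get_refined_keywords(response_body):
    """Return the refinedKeywords values from a Search API response body.

    Assumes refinedKeywords is a top-level JSON array; returns [] when the
    property is missing, null, or empty.
    """
    payload = json.loads(response_body)
    return list(payload.get("refinedKeywords") or [])


def describe_itd_result(response_body):
    """Summarize whether ITD extracted keywords from the current lq."""
    keywords = get_refined_keywords(response_body)
    if keywords:
        return "ITD extracted: " + ", ".join(keywords)
    return "ITD didn't extract keywords from the current lq"


# Usage with a trimmed-down, made-up response body:
sample = '{"totalCount": 12, "refinedKeywords": ["vpn", "error", "password"]}'
print(describe_itd_result(sample))  # ITD extracted: vpn, error, password
```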

What exactly are the Coveo ML capabilities for Salesforce communities?

Specifically, Coveo ML offers the following model types for Coveo for Salesforce - Experience Cloud Edition:

- ART to deliver the best results possible on community search results pages (see About Automatic Relevance Tuning).
- Query Suggestions (QS) to optimize recommended queries presented to users as they type in the Salesforce Community search box (see About Query Suggestions).
- Content Recommendations to include "People who did that also did this"-style sections in your websites (see About Content Recommendations).
- Dynamic Navigation Experience (DNE) to order facets and facet values in a relevant way on community search results pages (see Dynamic Navigation Experience).
- Case Classification to render relevant classification suggestions in support cases.
- Smart Snippets to provide answers to user queries directly on the search results page.

Does Coveo ML support Coveo for Sitecore?

Yes, Coveo for Sitecore supports Coveo ML when using a Cloud edition. However, Coveo ML isn’t available in on-premises installations.

Models

What’s the optimal model data period?

Depending on the model type, the default (recommended) model data period can be either 3 months or 6 months.

These default data periods are optimal for most implementations, typically taking into account a large dataset that covers user behavior trends.

Consider increasing the data period to get better-trained recommendations when your search hubs serve fewer than about 10,000 user queries per month.

Consider reducing the data period when your search hubs serve significantly more than 10,000 queries per month and you want to get fresher or more trending recommendations.
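The two guidelines above amount to a simple rule of thumb, sketched here in Python. The function name is hypothetical, and `high_volume_factor` is an assumed stand-in for "significantly more than" 10,000, which this article doesn’t quantify.

```python
def suggest_data_period_change(monthly_queries, wants_fresher_results=False,
                               threshold=10_000, high_volume_factor=2.0):
    """Apply the data period guidance as a rough rule of thumb.

    `high_volume_factor` is a hypothetical stand-in for "significantly more
    than" the threshold.
    """
    if monthly_queries < threshold:
        return "consider increasing the data period"
    if wants_fresher_results and monthly_queries >= threshold * high_volume_factor:
        return "consider reducing the data period"
    return "keep the default data period"


print(suggest_data_period_change(4_000))                               # consider increasing the data period
print(suggest_data_period_change(50_000, wants_fresher_results=True))  # consider reducing the data period
print(suggest_data_period_change(12_000, wants_fresher_results=True))  # keep the default data period
```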

For more information on model training sets, see Training and retraining.

When should I change a model learning interval/training frequency?

The recommended training frequencies for each Data Period value shown in the following table provide optimal results for most implementations. When training a model using a longer Data Period, you can’t retrain the model as frequently.

| Data period | Daily building | Weekly building | Monthly building |
|---|---|---|---|
| 1 month | | | |
| 3 months (Recommended) | | | |
| 6 months | | | |

Consider increasing the learning interval/training frequency when your search hubs serve at least 10,000 user queries per data period and, for example, your relevant content or your user behavior patterns are changing more frequently and you want recommendations to adapt more rapidly.

Consider reducing the learning interval/training frequency when your relevant content or your user behavior patterns are stable over time.

For more information on model training sets, see Training and retraining.

When exactly is a model retrained?

The Coveo ML service automatically manages the exact date and time at which models are retrained according to the Learning Interval/Training Frequency set for the model. You can’t schedule a precise retraining date and time, and you can’t find out when the model was last retrained or when it will next be retrained.

For more information on model training sets, see Training and retraining.

Model types

How’s Coveo ML deployed?

A Coveo organization administrator can enable and configure Coveo ML models in minutes via the Administration Console (see Manage models). Once configured, the machine learning model will begin building itself automatically.

Can Coveo ML models be tested before they’re activated?

Yes. You can test Coveo ML models before applying them to your query pipeline. By creating an A/B test, you can compare the performance of your current query pipeline with the performance of a new query pipeline that includes the model of your choice.

You can then review the model’s impact on the A/B Test tab of the query pipeline configuration page. Alternatively, you can use the built-in dashboard report and then evaluate the impact by comparing key metrics for both versions of the pipeline (see How do you measure Coveo ML impact?).

What languages do Coveo ML models support?

Coveo ML supports many languages.

A model contains a submodel for each language used by the users who performed the events on which the model is built (see Language). Therefore, Coveo ML models support many languages simultaneously, as well as multilingual search (see Coveo Machine Learning models).
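To illustrate the idea of one submodel per language, the following Python sketch partitions hypothetical `(language, query, clicked_item)` events by language and recommends items only from the submodel that matches the user’s language. It’s a conceptual sketch: the event shape, the click-count ranking, and the function names are assumptions, not how Coveo ML builds its submodels.

```python
from collections import Counter, defaultdict


def build_language_submodels(click_events):
    """Group hypothetical (language, query, clicked_item) events into one
    submodel per language: language -> query -> Counter of clicked items."""
    submodels = defaultdict(lambda: defaultdict(Counter))
    for language, query, clicked_item in click_events:
        submodels[language][query.lower()][clicked_item] += 1
    return submodels


def recommend(submodels, language, query, n=3):
    """Return up to n items most clicked for this query in the user's language."""
    per_query = submodels.get(language, {}).get(query.lower())
    if not per_query:
        return []
    return [item for item, _ in per_query.most_common(n)]


events = [
    ("en", "reset password", "kb-en-101"),
    ("en", "reset password", "kb-en-101"),
    ("en", "reset password", "kb-en-202"),
    ("fr", "réinitialiser mot de passe", "kb-fr-101"),
]
models = build_language_submodels(events)
print(recommend(models, "en", "Reset Password"))  # ['kb-en-101', 'kb-en-202']
print(recommend(models, "fr", "reset password"))  # []
```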

Note: Coveo ML Case Classification and Smart Snippets models currently only provide outputs for index items whose …

Does Coveo ML work on a secured Salesforce community?

Yes. Coveo ML works on communities where users must log in, as long as most authenticated users have access to a significant shared body of content, so that Coveo ML can learn from the crowd.

How long do Coveo ML models take to start improving relevance?

Most Coveo ML model types learn from user interactions on your website. The more events a Coveo ML model has to learn from, the better it will be at providing relevant results. If well implemented, Coveo ML generally reaches optimal performance after learning from 25,000 to 100,000 queries (see Coveo Platform implementation guide).

The time to improve relevance therefore depends on the level of search activity on your community and when you started gathering usage analytics data.

How do you measure Coveo ML impact?

You can use the following two traditional marketing metrics to evaluate how successfully your community search connects users with the information they need to solve their specific issue:

- Clickthrough Rate (CTR): The percentage of users clicking any link on the search results page. Higher values are better.
- Average Click Rank (ACR): Similar in concept to page rank, this metric measures the average position of clicked items in a given set of search results. Lower values are better, as a value of 1 represents the first result in a list.
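Both metrics are simple ratios. The Python sketch below computes them from a hypothetical list of search sessions, where each session lists the 1-based positions of the results the user clicked (an empty list means no click). Treating each session as one user is a simplifying assumption.

```python
def clickthrough_rate(sessions):
    """Percentage of sessions with at least one click on a search result."""
    if not sessions:
        return 0.0
    sessions_with_click = sum(1 for clicked_ranks in sessions if clicked_ranks)
    return 100.0 * sessions_with_click / len(sessions)


def average_click_rank(sessions):
    """Mean position of all clicked results (1 = first result), or None if no clicks."""
    ranks = [rank for clicked_ranks in sessions for rank in clicked_ranks]
    if not ranks:
        return None
    return sum(ranks) / len(ranks)


sessions = [[1], [2, 5], [], [1]]
print(clickthrough_rate(sessions))   # 75.0
print(average_click_rank(sessions))  # 2.25
```

Here three of four sessions include a click (CTR of 75%), and the four clicks at positions 1, 2, 5, and 1 average to 2.25.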

Coveo ML optimizes search results and query suggestions, and will therefore improve CTR and ACR metrics and contribute to increased self-service. You can test the addition of a Coveo ML model like any other query pipeline change (see Manage A/B tests).

Evaluate Automatic Relevance Tuning (ART) impact

You can use usage analytics reports to obtain the percentage of users who clicked search results that were promoted by ART by using the Click Ranking Modifier dimension.

For example, to determine the percentage of users who clicked ART-promoted results in a given month, create a usage analytics pie chart card using the Click Ranking Modifier dimension and the Click Event Count metric.

The newly created pie chart card now shows the number and the percentage of clicks made on results promoted by ART.
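If you export click events, the same breakdown can be reproduced offline. The Python sketch below assumes each click event is a dictionary with a hypothetical `ranking_modifier` field holding the Click Ranking Modifier value; the `"ART"` value in the sample data is a placeholder, so check the actual values that appear in your reports.

```python
from collections import Counter


def click_share_by_ranking_modifier(click_events):
    """Return {modifier: (click_count, percentage_of_clicks)}.

    Clicks without a ranking modifier are grouped under "(none)".
    """
    counts = Counter(event.get("ranking_modifier") or "(none)" for event in click_events)
    total = sum(counts.values())
    return {
        modifier: (count, round(100.0 * count / total, 1))
        for modifier, count in counts.items()
    }


clicks = [{"ranking_modifier": "ART"}] * 3 + [{"ranking_modifier": None}]
print(click_share_by_ranking_modifier(clicks))
# {'ART': (3, 75.0), '(none)': (1, 25.0)}
```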

Evaluate Query Suggestions (QS) impact

You can use usage analytics reports to obtain the number of search events originating from query suggestions by using the Search Cause dimension.

For example, to know the number of search events that originated from query suggestions in a given month, create a usage analytics pie chart card using the Search Cause dimension and the Search Event Count metric.

The newly created pie chart card now shows the total number of search events that occurred for each available search cause.

You can inspect the number of search events that originated from query suggestions by looking at the omniboxAnalytics value.
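Counting search causes follows the same pattern. In the Python sketch below, the `search_cause` field name and the `searchboxSubmit` sample value are assumptions for illustration; `omniboxAnalytics` is the search cause this article associates with query suggestions.

```python
from collections import Counter


def query_suggestion_searches(search_events):
    """Return (searches from query suggestions, share of all searches in %)."""
    causes = Counter(event.get("search_cause", "unknown") for event in search_events)
    total = sum(causes.values())
    from_suggestions = causes["omniboxAnalytics"]
    share = round(100.0 * from_suggestions / total, 1) if total else 0.0
    return from_suggestions, share


searches = [{"search_cause": "omniboxAnalytics"}] * 2 + [{"search_cause": "searchboxSubmit"}]
print(query_suggestion_searches(searches))  # (2, 66.7)
```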

How exactly does Automatic Relevance Tuning (ART) work?

Coveo ML uses machine learning techniques to analyze the search activity data captured by Coveo Usage Analytics (Coveo UA). Coveo ART tracks what users search for, if and how they reformulate their queries, what results they click, and whether they created a new support case. With this data, ART trains an algorithmic model for predicting which content will be most helpful to future users based upon their specific query. ART regularly retrains its model so that over time it gets better and better and adapts to new trends (see About Automatic Relevance Tuning).

How does ART ensure that the first results aren’t falsely promoted by users clicking them solely because they’re the first results?

ART evaluates user visits as a whole and allocates additional weight (that is, gives a higher score) to the last clicked item rather than the previously clicked ones.

A user performs a query and inspects the returned results:

- The user clicks the top two items, but these don’t answer their inquiry.
- The user clicks the next three results, and then closes the search page.
- ART assumes the fifth result is the best result (that is, the one that answers the user’s inquiry) since the click on this result is the last user action associated with the search event.
- As the ART model is rebuilt over time, the fifth result climbs the result list based on the additional weight acquired from being the last clicked item.
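The last-click weighting in this example can be illustrated with a toy Python sketch. The base weight of 1 per click, the bonus of 2 for the last clicked item, and the function names are arbitrary assumptions chosen to show the effect; they aren’t the weights ART uses.

```python
from collections import defaultdict


def score_visit(clicked_items, last_click_bonus=2.0):
    """Give every clicked item a weight of 1 and add a bonus to the last click."""
    scores = defaultdict(float)
    for item in clicked_items:
        scores[item] += 1.0
    if clicked_items:
        scores[clicked_items[-1]] += last_click_bonus
    return dict(scores)


def rank_items(visits):
    """Sum visit scores across visits and rank items from best to worst."""
    totals = defaultdict(float)
    for clicked_items in visits:
        for item, score in score_visit(clicked_items).items():
            totals[item] += score
    return sorted(totals, key=lambda item: (-totals[item], item))


# The scenario above: results 1 to 5 are clicked in order, and result 5 is clicked last.
visit = ["result-1", "result-2", "result-3", "result-4", "result-5"]
print(score_visit(visit)["result-5"])  # 3.0
print(rank_items([visit] * 10)[0])     # result-5
```

Even though the top results are clicked first in every visit, the accumulated last-click bonus moves the fifth result to the top of the ranking.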


What happens when enabling Usage Analytics and Coveo ML at the same time?

Coveo ML doesn’t recommend search results or query suggestions until sufficient usage analytics data is available. Coveo ML models start making recommendations once they’re retrained with that data, and they get better each time they’re retrained with more data.

Can Coveo ML work with Coveo on-premises?

No. Coveo ML doesn’t work with a full on-premises Coveo deployment (on-premises CES index, usage analytics module, REST Search API). Coveo ML requires Coveo REST Search API and usage analytics.