Troubleshoot Automatic Relevance Tuning models


Coveo Machine Learning (Coveo ML) Automatic Relevance Tuning (ART) models learn from click and search usage analytics events that occurred sequentially during the same visit. Based on those events, a given ART model extracts candidates, which are queries for which the model can recommend at least one item, and provides the most relevant items as top search results.

Note

ART requires real data from a specific community so that its results reflect real user intent. Therefore, providing fake or artificially generated usage data is strongly discouraged.

Sending the required usage analytics event data

Items can be used in ART model building only if they’re identifiable from the usage analytics events that pertain to them.

To that end, the contentIdKey and contentIdValue parameters must be present in the customData of the click events logged on those items.

For technical details on those events, see Log click events.
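The following Python sketch illustrates the requirement above. It builds a click event payload that carries contentIdKey and contentIdValue in its customData, then checks that both are present before the event is sent. Only customData, contentIdKey, and contentIdValue come from this article. The other field names (actionCause, searchQueryUid, documentPosition), the example key permanentid, and both helper functions are assumptions for illustration, so refer to Log click events for the actual event schema and how to send it.

```python
from typing import Any

# Only customData, contentIdKey, and contentIdValue come from the article.
# Every other field name and value below is an illustrative assumption.
REQUIRED_KEYS = ("contentIdKey", "contentIdValue")


def build_click_event(search_query_uid: str, position: int,
                      content_id_key: str, content_id_value: str) -> dict[str, Any]:
    """Return a click event payload whose customData identifies the clicked item."""
    return {
        "actionCause": "documentOpen",       # assumed field and value
        "searchQueryUid": search_query_uid,  # assumed: ties the click to its search event
        "documentPosition": position,        # assumed field name
        "customData": {
            "contentIdKey": content_id_key,      # name of the field identifying the item
            "contentIdValue": content_id_value,  # value of that field for the clicked item
        },
    }


def is_usable_for_art(event: dict[str, Any]) -> bool:
    """Return True if the event's customData carries non-empty item identifiers."""
    custom = event.get("customData") or {}
    for key in REQUIRED_KEYS:
        value = custom.get(key)
        if not isinstance(value, str) or not value.strip():
            return False
    return True


# "permanentid" and the IDs below are hypothetical example values.
event = build_click_event("hypothetical-search-uid", 1, "permanentid", "hypothetical-item-id")
print(is_usable_for_art(event))                                            # True
print(is_usable_for_art({"customData": {"contentIdKey": "permanentid"}}))  # False
```

Validating before sending keeps unidentifiable clicks out of the analytics data, since those clicks can't contribute to ART model building anyway.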

Reviewing your Coveo ML ART model learning dataset

When you have the required privileges, you can use the Analytics section of the Coveo Administration Console to browse user visits and thereby evaluate whether your search interface produces enough data for an ART model to learn from.

The following procedure assumes that you’re familiar with global dimension filters and Visit Browser features (see Review user visits with the Visit Browser).

-

On the Visit Browser (platform-ca | platform-eu | platform-au) page:

-

Select a date interval of three months (see Review search usage data by date interval).

-

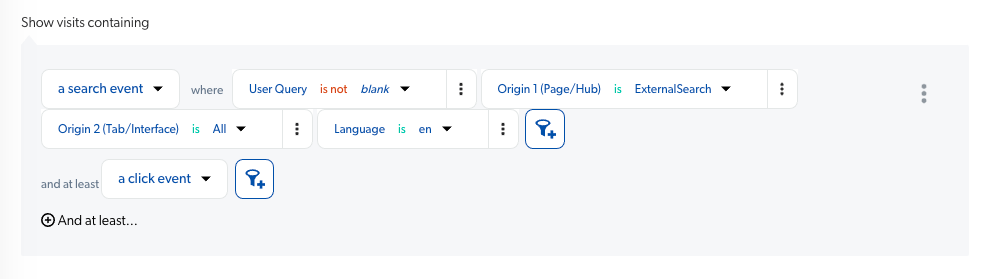

In the Show visits containing section, add the following filters (see Add visit filters):

-

a search event WHERE:

-

Query is not blank or n/a

-

Origin 1 (page/hub) is [

Search page or search hub name] -

Origin 2 (tab/interface) is [

Tab or search interface name] -

Language is [

Language]

-

-

and at least a click event

-

-

-

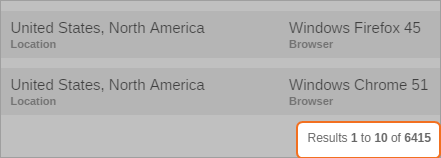

At the lower right of the screen, you can see the number of visits in the selected period from which an ART model could learn.
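To reason about which visits the filters above keep, the following Python sketch applies the same criteria to a simplified in-memory visit model. The Event and Visit classes and the is_learnable and count_learnable helpers are hypothetical and exist only for this illustration. They aren't part of the Coveo Administration Console or any Coveo API, and the Visit Browser remains the authoritative count.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified visit model; this is not a Coveo data format.
@dataclass
class Event:
    type: str            # "search" or "click"
    query: str = ""
    origin1: str = ""    # Origin 1 (page/hub)
    origin2: str = ""    # Origin 2 (tab/interface)
    language: str = ""


@dataclass
class Visit:
    events: list[Event] = field(default_factory=list)


def is_learnable(visit: Visit, origin1: str, origin2: str, language: str) -> bool:
    """Mirror the filters: a matching search event with a real query, plus at least one click."""
    has_search = any(
        e.type == "search"
        and e.query.strip().lower() not in ("", "n/a")
        and (e.origin1, e.origin2, e.language) == (origin1, origin2, language)
        for e in visit.events
    )
    has_click = any(e.type == "click" for e in visit.events)
    return has_search and has_click


def count_learnable(visits: list[Visit], origin1: str, origin2: str, language: str) -> int:
    """Count the visits that pass all the filters."""
    return sum(is_learnable(v, origin1, origin2, language) for v in visits)


visits = [
    Visit([Event("search", "running shoes", "Store", "All", "en"), Event("click")]),
    Visit([Event("search", "", "Store", "All", "en"), Event("click")]),  # blank query
    Visit([Event("search", "socks", "Store", "All", "en")]),             # no click
]
print(count_learnable(visits, "Store", "All", "en"))  # 1
```

Only the first visit counts: the second has a blank query, and the third has no click event.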

Training ART models by linking queries to results

When you leverage Coveo ML, manually adjusting the relevance of search results (for example, by creating query pipeline rules) becomes less necessary. For example, you no longer have to create thesaurus rules: simply by analyzing the usage analytics data of a specific search interface, ART models learn to recommend the same results for different queries that contain synonyms. You can help accelerate the learning process of an ART model by linking queries to results, for example by linking synonyms, as well as acronyms and the words they stand for, to the results they should return.


To help train Coveo ML ART models by linking queries to results:

- Ensure that Coveo ML ART is configured and enabled on your search interface (see Create an Automatic Relevance Tuning model).

- On your search interface, perform a query, and then click the result you want to be recommended.

- Repeat the previous step for each query you want to link to a result.

- Once the model is trained, on the Content Browser (platform-ca | platform-eu | platform-au) page, ensure that the model returns the expected result (see Inspect items with the Content Browser). Repeat the procedure if needed.

- Once the data period used to train your ART model has elapsed, remove any thesaurus rules. Otherwise, the wrong results can be returned in response to user queries, as the following example shows:

  Example

  During a shopping session, a customer typically browses through multiple items after performing a query.

  Taking advantage of this, you might want to promote a related item that wasn't searched for in the user's query. For instance, you could set up a thesaurus rule that includes search results for sports socks every time a user searches for running shoes. Since ART learns from searches and clicks to boost search results, the model will establish a relation between the query running shoes and the index items representing sports socks that were clicked after users searched for running shoes.

  After your ART model has been trained, you should remove this thesaurus rule. Otherwise, all the index items representing sports socks will be included in the search results whenever a user queries for running shoes. This isn't desirable, since only the sports socks items that were clicked after a search for running shoes should be included in the search results.

Notes

- After the next (scheduled) model update, your model will recommend results based on your training.

- Even after you remove the thesaurus rules, the search results for queries that include the terms you created the rules for will remain the same (unless the Match the query option was selected when associating the model). To confirm this, you can test your ART model by comparing the results it returns when it's associated with a query pipeline that contains the thesaurus rules to those it returns when it's associated with a query pipeline that doesn't.
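The running shoes and sports socks example can be illustrated with a deliberately simplified Python sketch. It contrasts a thesaurus-style query expansion with a click-count association between queries and items. This is a toy model with a hypothetical catalog and hypothetical helper functions. It isn't how Coveo ML implements ART; it only shows why an expansion rule matches every sports socks item, while learning from clicks keeps only the items users actually clicked.

```python
from collections import Counter, defaultdict

# Hypothetical catalog for illustration only: item ID -> category.
CATALOG = {
    "shoe-1": "running shoes",
    "shoe-2": "running shoes",
    "sock-1": "sports socks",
    "sock-2": "sports socks",
    "sock-3": "sports socks",
}

# Thesaurus-style expansion: "running shoes" also matches every "sports socks" item.
THESAURUS = {"running shoes": ["sports socks"]}


def thesaurus_results(query: str) -> set[str]:
    """Return every item whose category matches the query or one of its expansions."""
    terms = [query, *THESAURUS.get(query, [])]
    return {item for item, category in CATALOG.items() if category in terms}


def learn_associations(clicks: list[tuple[str, str]]) -> dict[str, Counter]:
    """Toy stand-in for click-based learning (not ART): count clicks per (query, item) pair."""
    model: dict[str, Counter] = defaultdict(Counter)
    for query, item in clicks:
        model[query][item] += 1
    return model


def recommended(model: dict[str, Counter], query: str, top_n: int = 3) -> list[str]:
    """Return the items most clicked for the query, most clicked first."""
    return [item for item, _ in model.get(query, Counter()).most_common(top_n)]


clicks = [
    ("running shoes", "shoe-1"),
    ("running shoes", "sock-2"),
    ("running shoes", "shoe-1"),
]
model = learn_associations(clicks)
print(sorted(thesaurus_results("running shoes")))  # ['shoe-1', 'shoe-2', 'sock-1', 'sock-2', 'sock-3']
print(recommended(model, "running shoes"))          # ['shoe-1', 'sock-2']
```

The thesaurus expansion returns all three sports socks items, whereas the click-based association returns only sock-2, the one users clicked after searching for running shoes.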