Test models

This feature will be deprecated in November 2024. Alternatively, you can quickly create a hosted search page to test Automatic Relevance Tuning (ART) and Query Suggestions (QS) models. You can also use the Relevance Inspector for additional ranking and relevance details.

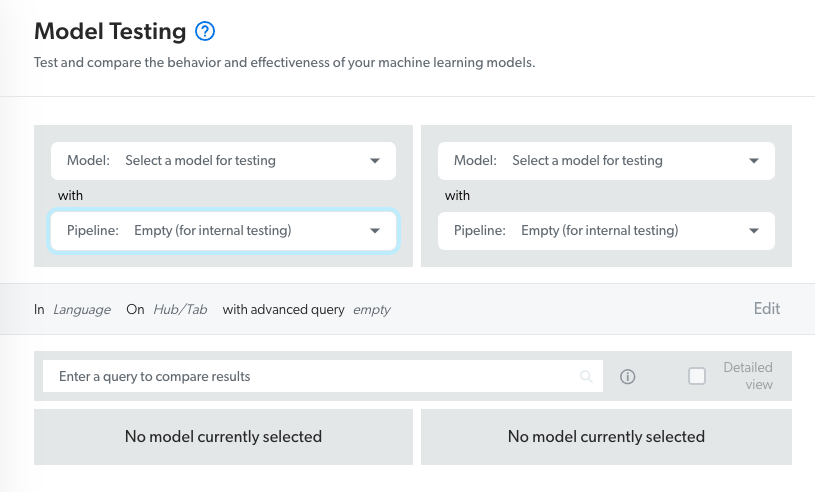

When you have the required privileges, you can use the Model Testing (platform-ca | platform-eu | platform-au) page of the Administration Console to compare two Coveo Machine Learning (Coveo ML) models of the same type, or to compare an ART model with the default ranking. You do so by performing queries and reviewing the results returned on each side.


Prerequisites

To take advantage of the Model Testing page, your Coveo organization must contain at least:

- Two active QS models

OR

- One active ART model
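The prerequisite can be read as a simple check. The sketch below is illustrative only: the `Model` record, its `kind` and `status` fields, and the `can_use_model_testing` helper are hypothetical names chosen for this example, not part of any Coveo API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Model:
    """Hypothetical record describing a Coveo ML model (not a real API type)."""
    name: str
    kind: str    # assumed values: "ART" or "QS"
    status: str  # assumed values: "Active" or any other status


def can_use_model_testing(models: List[Model]) -> bool:
    """Return True with at least two active QS models or one active ART model."""
    active = [m for m in models if m.status == "Active"]
    active_qs = sum(1 for m in active if m.kind == "QS")
    active_art = sum(1 for m in active if m.kind == "ART")
    return active_qs >= 2 or active_art >= 1
```

Under these assumptions, an organization with a single active QS model and no ART model would not meet the prerequisite.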

Test machine learning models

- Access the Model Testing (platform-ca | platform-eu | platform-au) page.

- In the Model dropdown menu on the left, select an active machine learning model. If the model you want to test is grayed out and unresponsive, the model isn’t in an Active state. See the “Status” column for more information on model statuses.

- In the Pipeline dropdown menu on the left, optionally replace the Empty query pipeline option with the pipeline you want to test with the selected model. This shows the results that the pipeline-model combination would return if the two were associated (if they aren’t already).

- On the right, select the pipeline-model combination that you want to compare with the one previously selected.

  In the Model dropdown menu, depending on the first selected model:

  - If you selected an ART model, select another active ART model or the Default results (index ranking only) option. The Default results (index ranking only) option returns results based on the default ranking score only (no query pipeline rules are applied).

  - If you selected a QS model, select another active QS model.

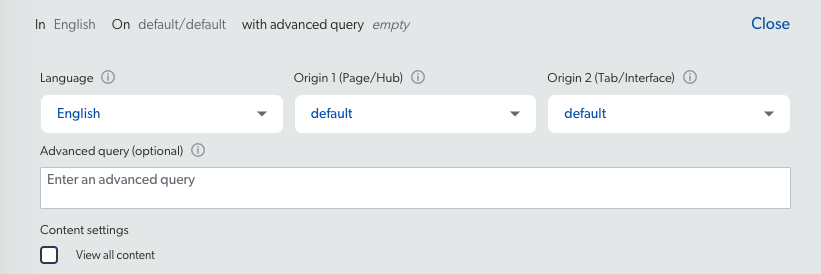

(Optional) Click Edit to show additional parameters, and then modify the default values (see Additional parameters reference).

Notes

Notes-

The additional parameters only impact the results returned by the models, meaning that the parameters don’t affect the results returned by the index (when the Default results (index ranking only) option is selected).

-

Model conditions and training dataset can be impacted by the advanced parameter values.

For example, if model A is only applied to the community search hub, and you select the case creation page in the Origin 1 (Page/Hub) dropdown menu, model A wouldn’t return results.

-

-

- In the search box, enter a test query, and then press Enter or click Search.

- (For ART model testing only) To review the ranking weights of all returned search results, select the Detailed view checkbox. To review the ranking weights of a particular result, click its result card (see Detailed View reference).
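The comparison rules in the steps above can be summarized in a few lines of code. This is a minimal sketch, assuming models are described by hypothetical `(name, kind)` pairs; the `comparison_options` helper is illustrative and does not correspond to a Coveo API.

```python
from typing import List, Tuple

DEFAULT_RESULTS = "Default results (index ranking only)"


def comparison_options(selected: Tuple[str, str],
                       active_models: List[Tuple[str, str]]) -> List[str]:
    """List what the first selected model can be compared with.

    Each model is a hypothetical (name, kind) pair, where kind is "ART" or "QS".
    """
    name, kind = selected
    # Only other active models of the same type are valid comparison targets.
    options = [n for n, k in active_models if k == kind and n != name]
    if kind == "ART":
        # An ART model can also be compared with the default index ranking.
        options.append(DEFAULT_RESULTS)
    return options
```

With two active ART models and two active QS models, an ART model can be compared with the other ART model or with the default results, while a QS model can only be compared with the other QS model.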


Reference

Additional parameters

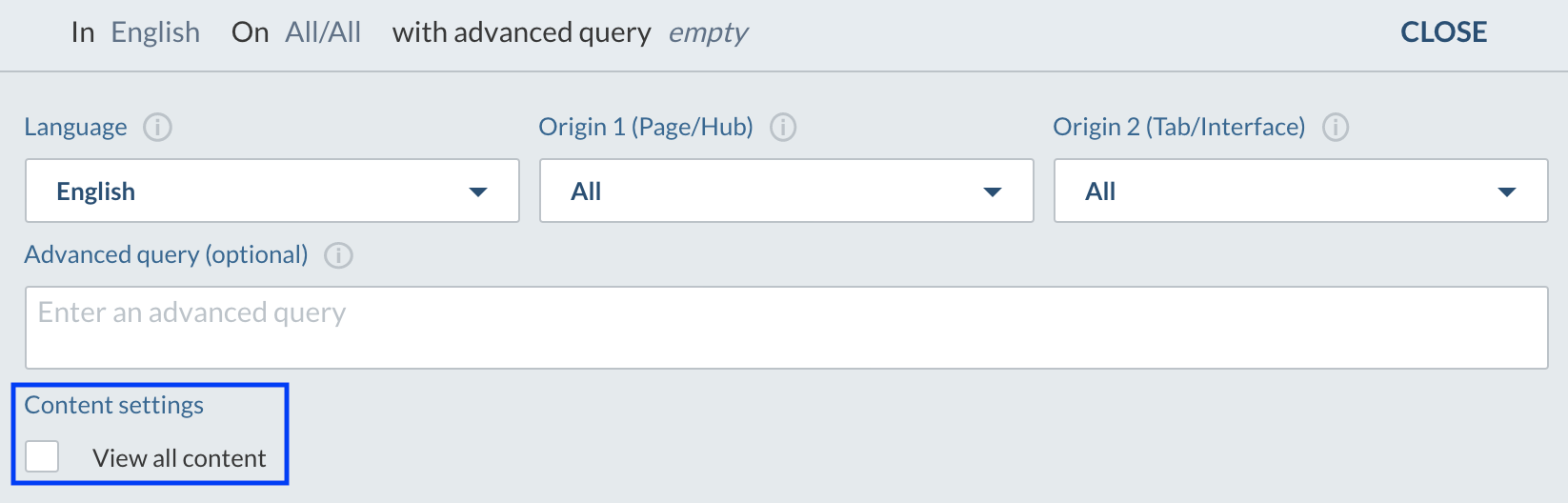

Language

Under Language, select the language in which the tested models will recommend results. The default value is English.

Note

When the selected models are built with data in multiple languages, only the languages shared by both selected models are selectable (if any).
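The selectable languages amount to the intersection of the two models' language sets. The sketch below illustrates that rule; the `selectable_languages` helper and the language codes used with it are hypothetical.

```python
from typing import Iterable, List


def selectable_languages(model_a: Iterable[str], model_b: Iterable[str]) -> List[str]:
    """Return the languages both models were built with, sorted for stable display."""
    return sorted(set(model_a) & set(model_b))
```

For example, if one model was built with English, French, and German data and the other with French, English, and Spanish data, only English and French would be offered. If the models share no language, the list is empty.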

Origin 1 (Page/Hub)

Under Origin 1 (Page/Hub), select the search hub or page from which the tested models will recommend results, or select None if you don’t want to filter on a specific search hub.


Origin 2 (Tab/Interface)

Under Origin 2 (Tab/Interface), select the search tab or interface from which the tested models will recommend results, or select None if you don’t want to filter on a specific tab or interface.


Advanced Query

In the Advanced Query box, optionally enter an advanced query expression including special field operators to further narrow the search results recommended by the tested models (see Advanced field queries).

(@audience==Developer)
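When preparing several test queries, an expression like the one above can be assembled programmatically before pasting it into the Advanced Query box. This is a minimal sketch, assuming only the `@field==value` form shown above and the `AND` boolean operator of the Coveo query syntax. The helper names and the `language` field are hypothetical, and values are neither quoted nor escaped.

```python
from typing import Dict


def field_equals(field: str, value: str) -> str:
    """Build a parenthesized exact-match expression such as (@audience==Developer)."""
    return f"(@{field}=={value})"


def all_of(filters: Dict[str, str]) -> str:
    """Combine several field expressions so that every one of them must match."""
    return " AND ".join(field_equals(f, v) for f, v in filters.items())


print(field_equals("audience", "Developer"))
print(all_of({"audience": "Developer", "language": "English"}))
```

Values containing spaces or special characters would need additional handling that this sketch doesn't attempt.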

Content Settings

When you’re a member of the Administrators built-in group (having the View all content privilege enabled), by default you’ll only see search results for source items that you’re authorized to see. This means that you may not see some items of a source or even entire sources.

As a member of the Administrators group, you can temporarily bypass these item permissions to troubleshoot search issues by selecting the View all content checkbox.

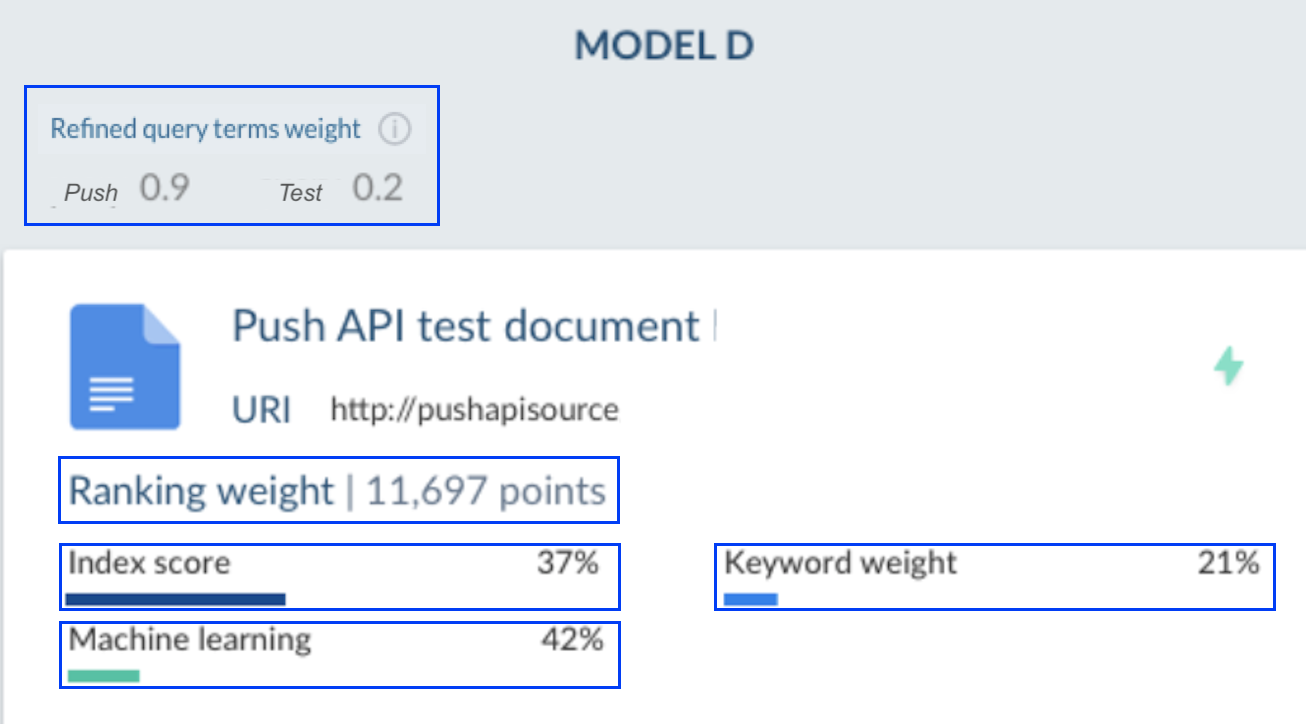

Detailed View

When this option is selected, you can visualize the following information:

Ranking Weights

The total weight of the selected item.

Refined Query Terms Weight

The score given by the ART model to the most relevant queried keywords.

Index Score

The score proportion given by the ranking factors that the index uses to evaluate the relevance score of each search result for each query, such as the item last modification date and the item location (see About ranking).

Machine Learning

The score proportion given by the ART model, based on the ranking modifier set in the model configuration and on end-user query and search result click behavior.

Keyword Weight

The score proportion given by language computing, which evaluates the presence of query terms in item titles and descriptions.
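To make "score proportion" concrete, the sketch below expresses each component as a percentage of a total. It assumes that the total ranking weight is the sum of the listed components, which this page doesn't state explicitly. The `weight_shares` helper and the numbers used with it are hypothetical, not values returned by the Model Testing page.

```python
from typing import Dict


def weight_shares(components: Dict[str, float]) -> Dict[str, float]:
    """Express each component weight as a percentage of their sum (assumed total)."""
    total = sum(components.values())
    if total == 0:
        # Avoid dividing by zero when no component contributes.
        return {name: 0.0 for name in components}
    return {name: round(100 * weight / total, 1) for name, weight in components.items()}
```

With hypothetical weights of 500 for Index Score, 300 for Machine Learning, and 200 for Keyword Weight, the shares would be 50%, 30%, and 20% respectively.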

Required privileges

The following table indicates the required privileges for members to test Machine Learning models using the Model Testing (platform-ca | platform-eu | platform-au) page (see Manage privileges and Privilege reference).

| Action | Service - Domain | Required access level |
|---|---|---|
| Test models | Machine Learning - Models | View |
| | Search - Execute queries | Allowed |