About Coveo Passage Retrieval (CPR)

Passage Retrieval (CPR) is a paid product extension. Contact Coveo Sales or your Account Manager to add CPR to your organization license.

A Coveo Machine Learning (Coveo ML) Passage Retrieval (CPR) model retrieves the most relevant segments of text (passages) from your dataset for a natural language user query that goes through a Coveo query pipeline.

The purpose of a CPR model is to retrieve the passages that will be used by your enterprise’s retrieval-augmented generation (RAG) system to enhance your large language model (LLM)-powered applications. This is done by using the Passage Retrieval API during the retrieval process of your RAG system.

Therefore, in your RAG system implementation, the Passage Retrieval (CPR) model retrieves the most relevant passages, and the Passage Retrieval API provides the passages to your enterprise RAG system.

|

|

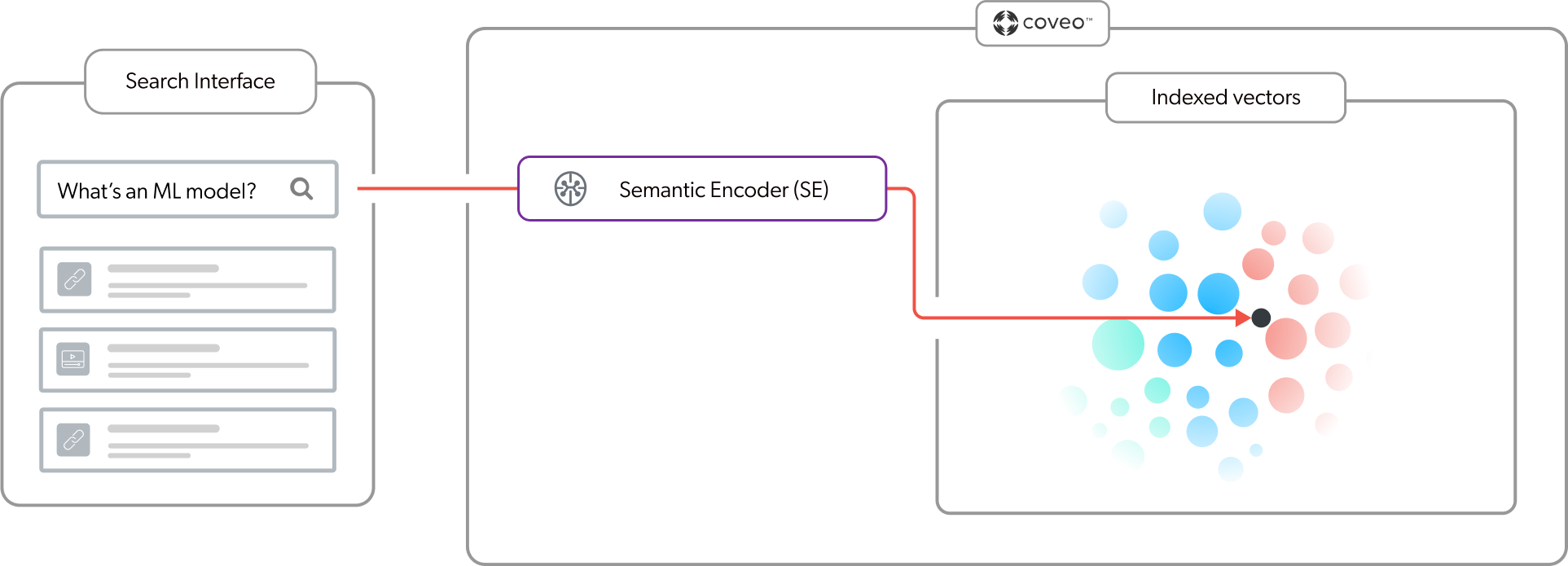

Your CPR implementation must also include a Semantic Encoder (SE) model. An SE model uses embeddings and vector search to retrieve the items in the index with high semantic similarity with the query. This ensures that the most relevant passages are retrieved. |

By default, content retrieval is supported only for English content. However, Coveo offers beta support for content retrieval in languages other than English. Learn more about multilingual content retrieval and answer generation.

Why you need a CPR model

The success of any RAG system depends on the quality and accessibility of the underlying data. The challenge lies not just in retrieving this data but in making sure the data is up to date, contextually relevant, and secure.

When you add a CPR model to a Coveo Platform implementation, it augments an LLM’s generative abilities by grounding the LLM with relevant and personalized content.

The CPR model works with Coveo’s existing indexing, AI, personalization, recommendation, machine learning, relevance, and security features. The CPR model retrieves passages only from the content that you specify, and the content resides in a secure index in your Coveo organization.

The passages retrieved by the CPR model are in a format that you can use across all your RAG system implementations.

In short, by using a CPR model, you’re leveraging the power of the Coveo Platform and its AI search relevance technology to feed the LLM-powered applications in your custom RAG system with relevant and secure enterprise content.

CPR overview

Your CPR implementation must also include a Semantic Encoder (SE) model. This section provides a high-level look at how CPR and SE work together in the context of a user query to retrieve passages. The main CPR processes are covered in more detail later in this article.

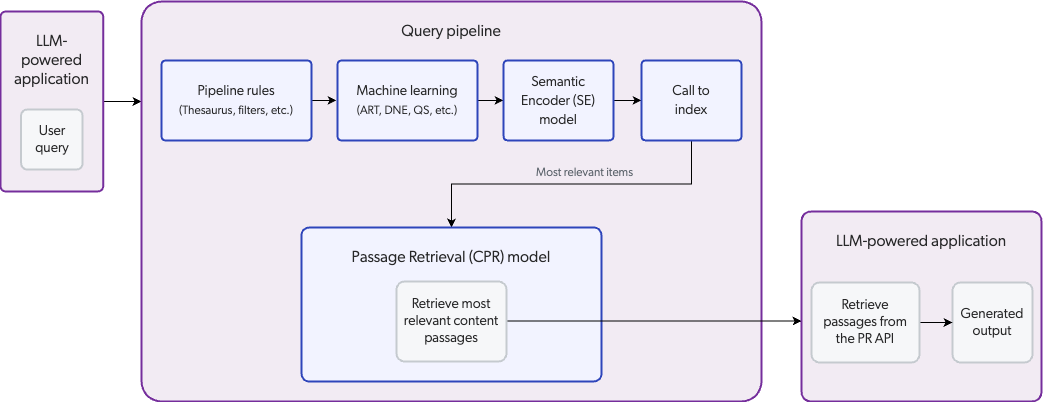

The following steps describe the passage retrieval flow as shown in the above diagram:

- A user enters a query in an LLM-powered application configured for CPR.

- As it normally does, the query passes through a query pipeline where pipeline rules and machine learning are applied to optimize relevance. However, the Semantic Encoder (SE) model adds vector search capabilities to the LLM-powered application in addition to the traditional lexical (keyword) search. Vector search improves search results by using embeddings to retrieve the items in the index with high semantic similarity with the query.

- The search engine identifies the most relevant items in the index, and sends a list of the items to the CPR model. This is referred to as first-stage content retrieval.

- The CPR model applies chunking to retrieve the most relevant passages from the items identified during first-stage content retrieval. This is referred to as second-stage content retrieval.

- The LLM-powered application leverages the PR API to retrieve the passages identified by the CPR model.

- The LLM generates an output based on the passages retrieved from the PR API and returns it to the application for display to the user.
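The last two steps, where the application gets passages and hands them to the LLM, can be illustrated with a minimal Python sketch. It is an assumption-laden outline and not Coveo code: `Passage`, `build_prompt`, `answer`, and the `source_title` field are hypothetical names, and `retrieve_passages` and `generate` are placeholders for your own call to the Passage Retrieval API and your own LLM client.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Passage:
    text: str          # the retrieved segment of text
    source_title: str  # hypothetical field: title of the item the passage came from


def build_prompt(query: str, passages: List[Passage]) -> str:
    """Ground the LLM by placing the retrieved passages ahead of the question."""
    context = "\n".join(
        f"[{i}] ({p.source_title}) {p.text}"
        for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the passages below.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(
    query: str,
    retrieve_passages: Callable[[str], List[Passage]],  # placeholder for the passage retrieval call
    generate: Callable[[str], str],                      # placeholder for the LLM call
) -> str:
    """Retrieve passages for the query, then generate an output grounded in them."""
    passages = retrieve_passages(query)
    return generate(build_prompt(query, passages))
```

Passing the retriever and the generator in as callables keeps the sketch independent of any particular HTTP client or LLM vendor, which also makes the flow easy to test with stubs.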

CPR processes

Let’s look at the CPR feature in more detail by examining the two main processes that are involved in passage retrieval: embeddings and relevant content retrieval.

Embeddings

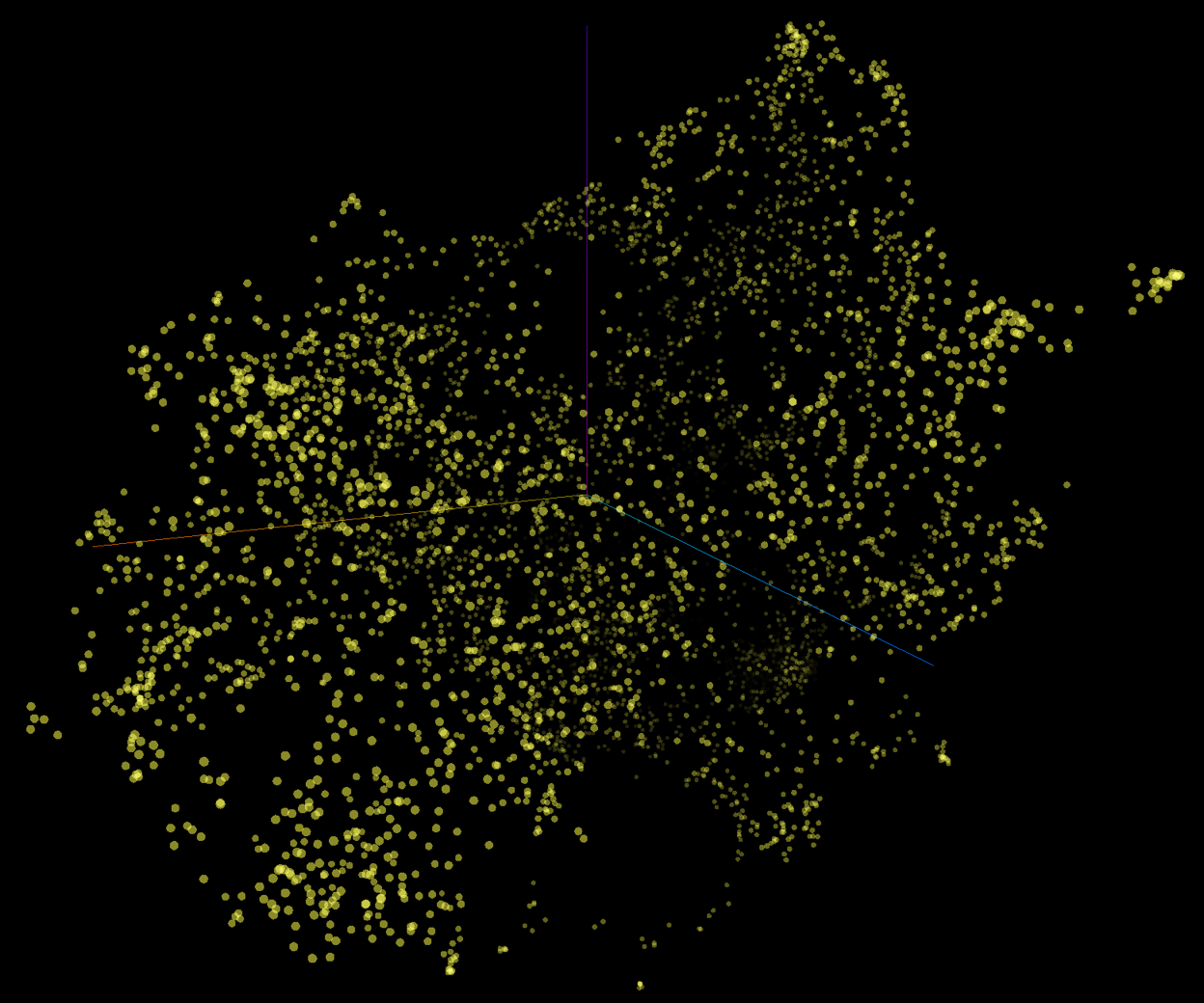

Embedding is a machine learning technique used in natural language processing (NLP) to convert text data into numerical representations (vectors) mapped to a vector space. The vector space can represent the meaning of words, phrases, or documents as multidimensional points (vectors). Therefore, vectors with similar meaning occupy relatively close positions within the vector space. Embeddings are at the core of vector-based search, such as semantic search, that’s used to find similarities based on meaning and context.

The following is a graphical representation of a vector space with each dot representing a vector (embedding). Each vector is mapped to a specific position in the multi-dimensional space. Vectors with similar meaning occupy relatively close positions.

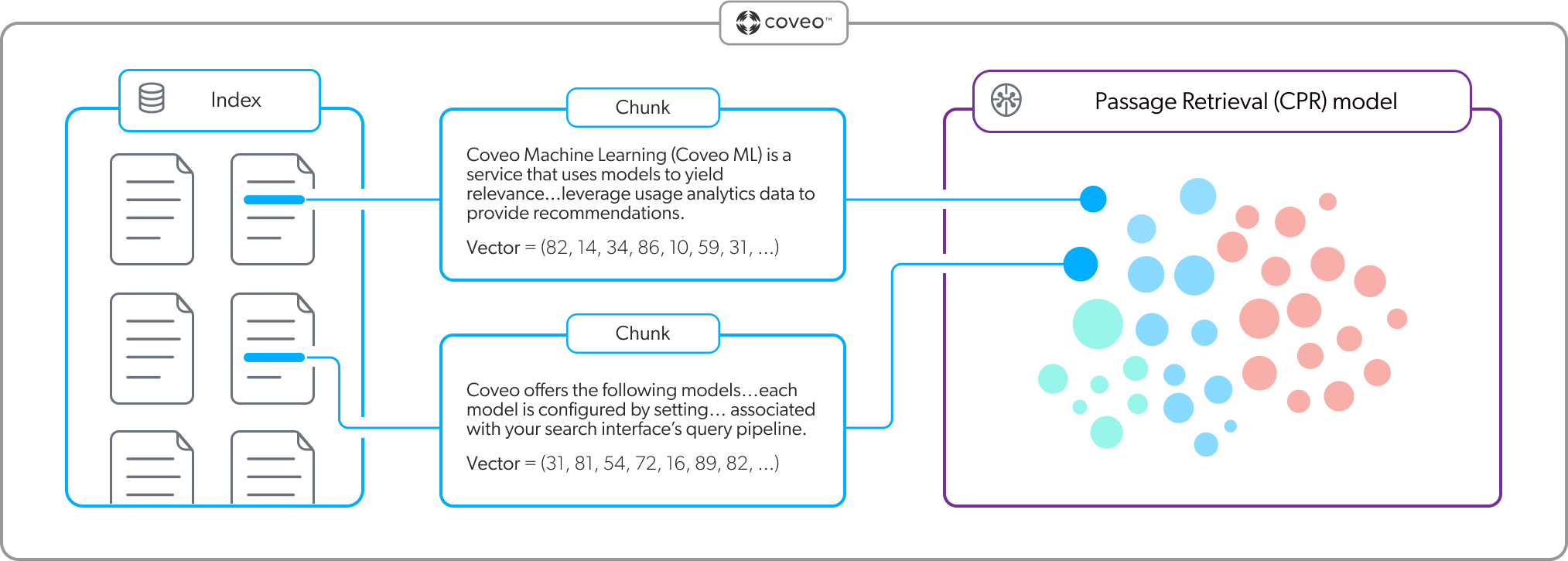
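To make "close positions in the vector space" concrete, here is a small sketch that compares toy vectors with cosine similarity, a common closeness measure for embeddings. The three-dimensional vectors and their labels are made up for illustration. Real embeddings have many more dimensions, and this article doesn't state which similarity measure the Coveo models use.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


# Toy vectors (assumed values, not produced by a real model).
query_vec = [0.9, 0.1, 0.0]          # "how do I change my password"
reset_password_vec = [0.8, 0.2, 0.1]  # a passage about resetting passwords
shipping_rates_vec = [0.0, 0.3, 0.9]  # a passage about shipping rates

# The password passage points in nearly the same direction as the query,
# so it scores higher than the unrelated shipping passage.
closer = cosine_similarity(query_vec, reset_password_vec)
farther = cosine_similarity(query_vec, shipping_rates_vec)
```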

A CPR implementation must include both a Passage Retrieval (CPR) model and a Semantic Encoder (SE) model. Each model creates and uses its own embedding vector space. The models have the dual purpose of creating the embeddings, and then referencing their respective vector spaces at query time to retrieve relevant content. The SE model uses the embeddings for first-stage content retrieval, and the CPR model uses the embeddings for second-stage content retrieval.

The CPR and SE models use a pre-trained sentence transformer language model to create the embeddings using chunks. Each model creates the embeddings when the model builds, and then updates the embeddings based on their respective build schedules. The CPR model stores the embeddings in model memory, while the SE model stores the embeddings in the Coveo unified index. For more information, see What does a CPR model do? and What does an SE model do?.

Chunking

Chunking is a processing strategy that’s used to break down large pieces of text into smaller segments called chunks (passages). Large language models (LLMs) then use these chunks to create the embeddings. Each chunk is mapped as a distinct vector in the embedding vector space.

CPR and SE models use optimized chunking strategies to ensure that a passage contains just enough text and context to be semantically relevant.

When a CPR or SE model builds, the content of each item that’s used by the model is broken down into passages that are then mapped as individual vectors. An effective chunking strategy ensures that when it comes time to retrieve content, the models can find the most contextually relevant items based on the query.

Note

You can choose the chunking strategy that the CPR model uses to create the chunks.
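As a rough illustration of what a chunking strategy does, the sketch below splits text into fixed-size word windows that overlap slightly, so that context spanning a boundary isn't lost. This is a generic technique chosen for the example, not one of the strategies that the CPR or SE models actually use. The function name and the `max_words` and `overlap` parameters are hypothetical.

```python
from typing import List


def chunk_words(text: str, max_words: int = 50, overlap: int = 10) -> List[str]:
    """Split text into chunks of at most max_words words, sharing `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the window already reached the end of the text
    return chunks
```

Each returned chunk would then be embedded as its own vector. A larger `max_words` keeps more context per passage, while a smaller one makes each passage more focused.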

Relevant content retrieval

When using generative AI to generate text from data, it’s essential to identify and control the content that will be used as the data.

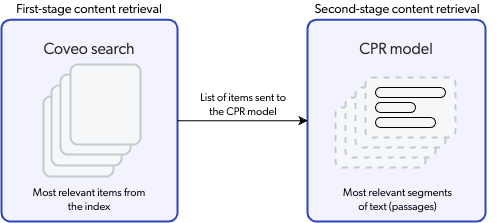

To ensure that CPR retrieves content based on the most relevant data, the process involves two layers of content retrieval.

Note

Your enterprise content permissions are enforced during content retrieval. This ensures that an output generated from the retrieved content only shows the information that the authenticated user is allowed to access.

- First-stage content retrieval identifies the most relevant items in the index.

- Second-stage content retrieval identifies the most relevant passages from the items retrieved during first-stage content retrieval.

By default, content retrieval is supported only for English content. However, Coveo offers beta support for content retrieval in languages other than English. Learn more about multilingual content retrieval and answer generation.

First-stage content retrieval

The initial content retrieval occurs at the item level, where the Coveo search engine finds the most relevant items in the index for a given query. When a user performs a query in an application that leverages CPR, the query passes through a query pipeline where rules and machine learning are applied to optimize relevance. When first-stage content retrieval is complete, the search engine sends a list of the most relevant items to the CPR model. The CPR model takes only these most relevant items into consideration during second-stage content retrieval.

Because the CPR model relies on the effectiveness of first-stage content retrieval, incorporating a Semantic Encoder (SE) model into your LLM-powered application ensures that the most relevant items are available for CPR. Given that user queries for passage retrieval are typically complex and use natural language, retrieving passages based on lexical search results alone wouldn’t necessarily provide contextually relevant passages. An SE model uses vector-based search to extract the meaning in a complex natural language query. As shown in the following diagram, when a user enters a query, the SE model embeds the query into an embedding vector space in the index to find the items with high semantic similarity with the query. This means that search results are based on the meaning and context of the query, and not just on keywords. For more information, see About Semantic Encoder (SE).

Second-stage content retrieval

With first-stage content retrieval complete, the CPR model now knows the most contextually relevant items in the index to which the user has access. The next step is to identify the most relevant passages (chunks) from those items. The passages will be used as the data from which the response will be generated.

The CPR model uses the embedding vector space in its memory to find the most relevant passages from the items identified during first-stage retrieval. As shown in the following diagram, at query time the CPR model embeds the query into the vector space and performs a vector search to find the passages with the highest semantic similarity with the query.

Second-stage content retrieval results in a collection of the most relevant passages from the most relevant items in the index. Your LLM-powered application can now retrieve the most relevant passages from the CPR model using the Passage Retrieval API to generate its output.
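The two stages can be sketched in a few lines of Python. This is a simplified illustration, not Coveo's implementation. `Item`, `two_stage_retrieve`, `allowed_users`, `max_items`, and `max_passages` are hypothetical names. A single query vector stands in for the separate SE and CPR vector spaces. The first stage here ranks by vector similarity only, whereas the real first stage also applies lexical search, pipeline rules, and machine learning.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, returning 0.0 when either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Item:
    title: str
    embedding: Vector                    # item-level vector used in the first stage
    passages: List[Tuple[str, Vector]]   # (passage text, passage vector) pairs
    allowed_users: FrozenSet[str]        # toy stand-in for content permissions


def two_stage_retrieve(
    query_vec: Vector,
    user: str,
    items: List[Item],
    max_items: int = 2,
    max_passages: int = 3,
) -> List[str]:
    # Permissions: only items the user may access are ever considered.
    visible = [item for item in items if user in item.allowed_users]

    # First stage: keep the most relevant items.
    top_items = sorted(
        visible, key=lambda item: cosine(query_vec, item.embedding), reverse=True
    )[:max_items]

    # Second stage: rank passages drawn only from those items.
    scored = [
        (text, cosine(query_vec, vec))
        for item in top_items
        for text, vec in item.passages
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in scored[:max_passages]]
```

Because the second stage only sees passages from the items that survived the first stage, a weak first stage cannot be repaired later, which is why the effectiveness of first-stage retrieval matters so much.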

Note

You can set a custom value for the maximum number of items that the CPR model considers when retrieving the most relevant segments of text (passages). This is an advanced model query pipeline association configuration that should be used by experienced Coveo administrators only. For more information, see CPR model association advanced configuration.

What’s next?

Implement CPR in your Coveo organization.