Evaluate generated answers

The Coveo Knowledge Hub is currently available as a beta to early-access customers only. Contact your Customer Success Manager for early access to this feature.

Evaluating the answers generated by Relevance Generative Answering (RGA) is a crucial part of your RGA implementation, as it is with any generative answering system.

At a basic level, RGA relies on data and algorithms to generate answers. Evaluating the answers generated for specific user queries helps you assess how effective your RGA implementation is for your dataset. This evaluation is an important step when testing your RGA implementation in a pre-production environment. Evaluations after you go to production are equally valuable, because they help you fine-tune and improve your implementation as your dataset evolves.

How to evaluate generated answers

How do you effectively evaluate a generated answer? Providing and gathering feedback on a generated answer’s perceived correctness, relevance, and usefulness is a good first step, but feedback alone doesn’t offer insights that you can use to improve your generative answering implementation. For example, if a generated answer is flagged as incorrect or irrelevant, troubleshooting the issue is difficult without a more in-depth analysis of how the answer was generated. The same applies to a generated answer that’s considered correct but isn’t based on the most relevant or up-to-date content.

An effective answer evaluation strategy, therefore, should include not only a review of the generated answer but also an examination of the segments of text (chunks) that were used to generate that answer.


You’re here

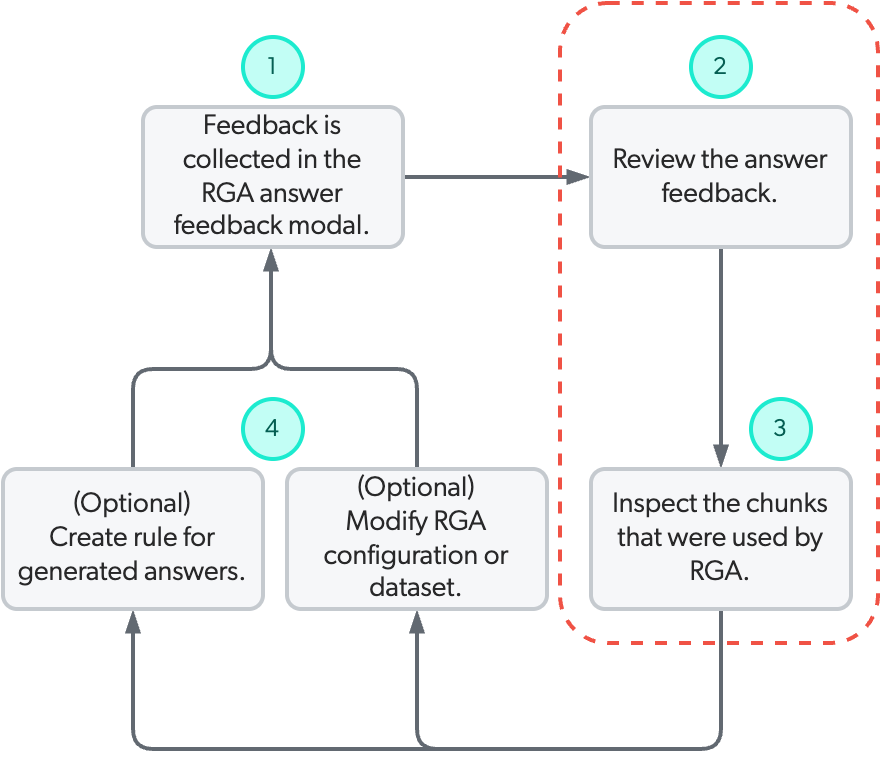

In the workflow for improving your RGA implementation based on answer evaluation, an effective answer evaluation strategy includes reviewing the feedback to identify the answers that need your attention and inspecting the chunks that were used to generate those answers.

Use the following Knowledge Hub tools to evaluate your RGA-generated answers:

- Use the Answer Manager to review the user feedback collected for the generated answers.

  For feedback to appear in the Answer Manager, you must associate an answer configuration with your RGA-enabled search interface.

  Note: Feedback is collected when the thumbs-up or thumbs-down icon is clicked in the RGA component in the search interface. Feedback is typically provided by search interface users, but it can also be provided by a subject matter expert in your organization tasked with testing the RGA implementation.

- Use the Chunk Inspector to inspect the chunks that RGA used to generate a specific answer. This is especially useful when evaluating answers with negative feedback or answers that have been flagged as needing improvement.
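The first half of this strategy, reviewing feedback to decide which answers deserve a closer look at their chunks, can be sketched in a few lines of Python. This is only an illustration of the triage logic, not a Coveo API: the `Feedback` record shape, the `answers_needing_review` helper, and the `min_votes` and `max_helpful_ratio` thresholds are all hypothetical, and the sketch assumes you already have thumbs-up and thumbs-down events available in some form.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Feedback:
    """One thumbs-up / thumbs-down event (hypothetical record shape)."""
    query: str
    helpful: bool


def answers_needing_review(events, min_votes=3, max_helpful_ratio=0.5):
    """Return (query, helpful_ratio, votes) for poorly rated answers, worst first.

    Queries with fewer than `min_votes` votes are skipped, since a single
    thumbs-down is weak evidence. Both thresholds are illustrative assumptions.
    """
    tallies = defaultdict(lambda: [0, 0])  # query -> [helpful votes, total votes]
    for event in events:
        tallies[event.query][0] += int(event.helpful)
        tallies[event.query][1] += 1

    flagged = []
    for query, (helpful, total) in tallies.items():
        ratio = helpful / total
        if total >= min_votes and ratio <= max_helpful_ratio:
            flagged.append((query, ratio, total))

    # Lowest helpful ratio first; ties are broken by the larger vote count.
    return sorted(flagged, key=lambda item: (item[1], -item[2]))
```

The queries this returns are the ones whose chunks would be worth inspecting first. The vote-count floor is a design choice: it keeps a lone negative rating from sending you into a chunk-level investigation before there is enough feedback to suggest a pattern.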

Based on the insights you gain from reviewing the feedback and chunk information, you can then choose to create answer rules, modify your content, and modify your RGA implementation configuration to improve your RGA implementation.