Product embeddings and vectors

A product embedding is a machine learning procedure that assigns each product a position in a multidimensional vector space. Similar products are placed close to each other, while dissimilar products are placed far apart.

Each product’s position in the space is called its product vector. Coveo Personalization-as-you-go (PAYG) models use the embedding procedure in many ways: the resulting relationships between products reveal subtle information about them and help personalize search results.
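To make “close” and “far” concrete, the following minimal sketch measures closeness between product vectors with cosine similarity, a common choice for embeddings. The product IDs and the 4-dimensional vectors are hypothetical values written by hand for illustration; real product vectors are learned and have many more dimensions, and this is not a description of how Coveo computes similarity internally.

```python
import math

# Hypothetical 4-dimensional product vectors (illustration only; real
# embeddings are learned from data and have many more dimensions).
PRODUCT_VECTORS: dict[str, list[float]] = {
    "running-shoe": [0.9, 0.8, 0.1, 0.0],
    "trail-running-shoe": [0.8, 0.9, 0.2, 0.1],
    "dress-shoe": [0.1, 0.2, 0.9, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(product_id: str) -> list[tuple[str, float]]:
    """Rank every other product by similarity to product_id, closest first."""
    target = PRODUCT_VECTORS[product_id]
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in PRODUCT_VECTORS.items()
        if other != product_id
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

With these toy values, `most_similar("running-shoe")` ranks `trail-running-shoe` well ahead of `dress-shoe`, mirroring the idea that similar products sit close together in the space.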

Traditional versus new approach to vectors

Traditionally, similar products are grouped by manually assigning or tagging them with categories, brands, sizes, or colors, and recommendations are then based on these predefined dimensions. This method is limited in its ability to determine which products are similar or complementary to one another, which is where product embeddings are useful.

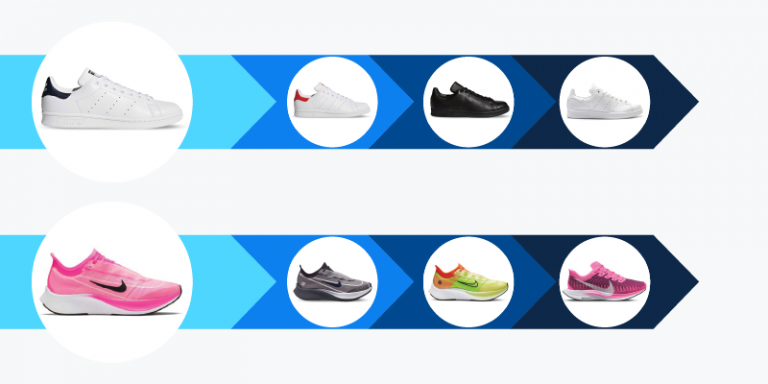

The main reasoning behind embeddings is that products which are “related” tend to appear in the same browsing sessions. For example, when a visitor is training for a marathon, they might browse for running shoes and other related products in the same session.

A digital commerce experience is made up of user sessions, and each session is made up of interactions with different products. Browsing can be seen as a sequence of events, such as going from product A to product B.
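One common way to turn browsing sequences into training signal, used by word2vec-style product embedding techniques, is to pair each viewed product with the products viewed shortly before and after it in the same session. The sketch below only extracts and counts those pairs; the session data, the `window` parameter, and the function names are hypothetical, and the source does not state that Coveo trains its embeddings this way. An embedding model would then be trained so that products that frequently form pairs end up with nearby vectors.

```python
from collections import Counter

# Hypothetical browsing sessions: each is the ordered list of product IDs
# a visitor viewed.
SESSIONS: list[list[str]] = [
    ["running-shoe", "running-socks", "running-shorts"],
    ["running-shoe", "running-shorts", "water-bottle"],
    ["dress-shoe", "tie", "dress-shirt"],
]


def context_pairs(session: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Pair each product with the products viewed up to `window` steps away."""
    pairs = []
    for i, target in enumerate(session):
        start = max(0, i - window)
        end = min(len(session), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((target, session[j]))
    return pairs


def cooccurrence_counts(sessions: list[list[str]], window: int = 2) -> Counter:
    """Count how often each (target, context) pair appears across all sessions."""
    counts: Counter = Counter()
    for session in sessions:
        counts.update(context_pairs(session, window))
    return counts
```

Here `("running-shoe", "running-shorts")` appears in two sessions, while running shoes and ties never co-occur, which is the kind of behavioral signal that pulls related products together.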

A user session vector represents the products a user has recently viewed. Comparing this session vector to the product vectors in the precomputed embedding space makes it possible to predict the user’s short-term interests, which can then drive recommendations or boost products in search results.
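As a minimal sketch of this comparison, assuming the session vector is simply the average of the recently viewed product vectors (the source does not specify how Coveo aggregates them), the following code builds a session vector and returns the unseen products closest to it. The product IDs, vectors, and function names are hypothetical.

```python
import math

# Hypothetical precomputed product vectors (illustration only).
PRODUCT_VECTORS: dict[str, list[float]] = {
    "running-shoe": [0.9, 0.8, 0.1],
    "running-shorts": [0.8, 0.7, 0.2],
    "dress-shoe": [0.1, 0.2, 0.9],
    "running-jacket": [0.7, 0.9, 0.1],
}


def session_vector(viewed: list[str]) -> list[float]:
    """Average the vectors of the products viewed in the session (assumed aggregation)."""
    dims = len(PRODUCT_VECTORS[viewed[0]])
    total = [0.0] * dims
    for product_id in viewed:
        for d, value in enumerate(PRODUCT_VECTORS[product_id]):
            total[d] += value
    return [value / len(viewed) for value in total]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recommend(viewed: list[str], k: int = 2) -> list[str]:
    """Return the k products not yet viewed that are closest to the session vector."""
    user = session_vector(viewed)
    candidates = [p for p in PRODUCT_VECTORS if p not in viewed]
    candidates.sort(key=lambda p: cosine_similarity(user, PRODUCT_VECTORS[p]), reverse=True)
    return candidates[:k]
```

After viewing running shoes and running shorts, `recommend(["running-shoe", "running-shorts"], k=1)` returns `["running-jacket"]` with these toy vectors. A production system might instead weight recent views more heavily; plain averaging is kept here for readability.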

The previous plot is a 3-dimensional representation of a high-dimensional product embedding, in which distinct clusters of products are easily identifiable. Product embedding algorithms are a form of deep learning model. They automatically capture subtle aspects of a product, such as the related sport, gender, and style, based purely on user behavior, customer data, and product information, and can power many commerce use cases at scale. In addition, the Coveo Platform uses catalog data to further augment these vectors.

Personalized search

Coveo Personalization-as-you-go offerings use product vectors as building blocks across all models. Once the product embeddings are computed, a user’s product preferences can be taken into account to provide personalized search results throughout the customer journey.

Consider again the initial example of a visitor browsing for running shoes.

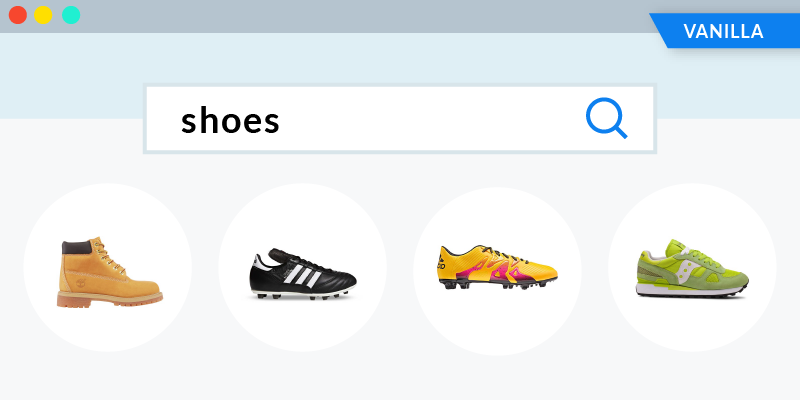

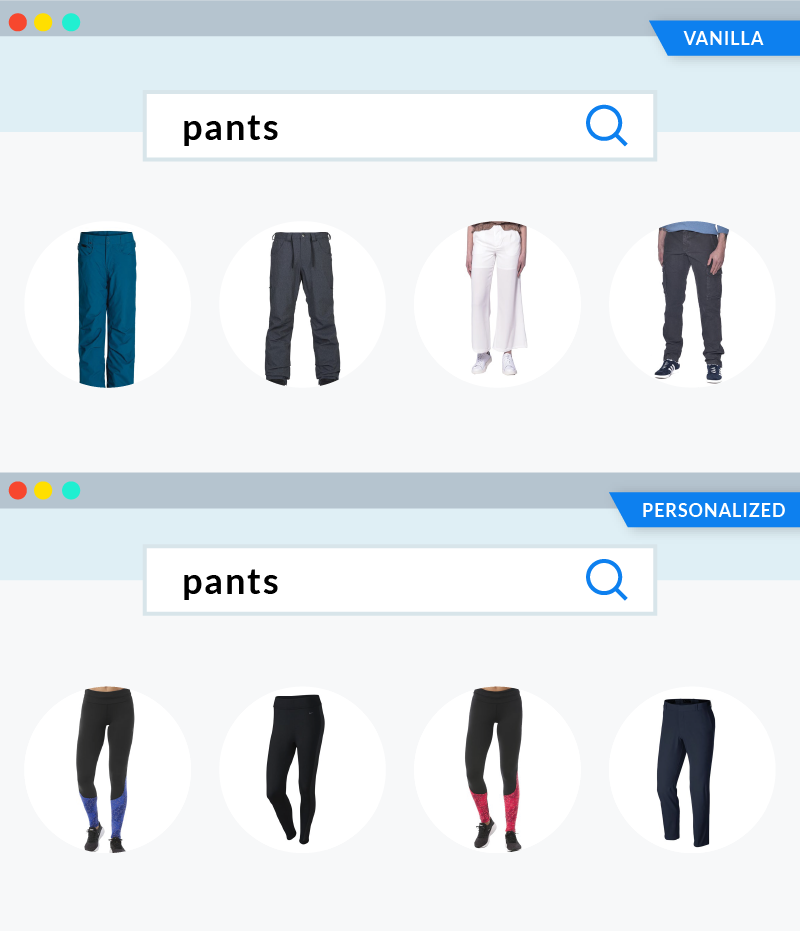

In the vanilla, non-personalized scenario, querying for shoes returns good results, but they are completely unrelated to the “running shoe” theme mentioned earlier.

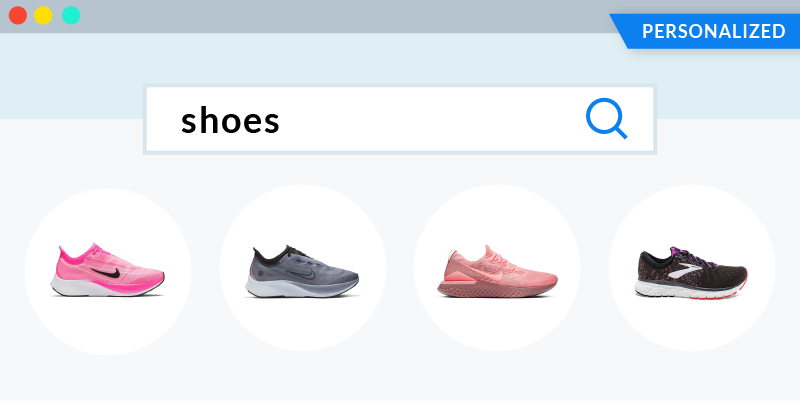

By introducing the session vector of a user specifically interested in sports into the ranking mix, results become much more relevant to running shoes.
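One simple way to picture “introducing the session vector into the ranking mix” is a weighted blend of each result’s text relevance score and its similarity to the session vector. The sketch below is a hypothetical illustration, not the Coveo ranking formula: the `RESULTS` data, the relevance scores, and the `weight` parameter are all assumptions made for the example.

```python
import math

# Hypothetical search results for the query "shoes": a text relevance score
# from the search engine plus each product's embedding vector.
RESULTS = [
    {"id": "dress-shoe", "relevance": 0.92, "vector": [0.1, 0.2, 0.9]},
    {"id": "sandal", "relevance": 0.88, "vector": [0.3, 0.1, 0.6]},
    {"id": "running-shoe", "relevance": 0.85, "vector": [0.9, 0.8, 0.1]},
]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def personalized_ranking(results: list[dict], session_vec: list[float], weight: float = 0.5) -> list[str]:
    """Re-rank results by a weighted blend of relevance and session affinity.

    weight=0.0 reproduces the vanilla ranking; higher values favor products
    whose vectors are close to the visitor's session vector.
    """

    def blended(result: dict) -> float:
        affinity = cosine_similarity(session_vec, result["vector"])
        return (1 - weight) * result["relevance"] + weight * affinity

    return [r["id"] for r in sorted(results, key=blended, reverse=True)]
```

With a sports-oriented session vector such as `[0.85, 0.75, 0.15]`, `weight=0.0` keeps the vanilla order (`dress-shoe`, `sandal`, `running-shoe`), while `weight=0.5` moves `running-shoe` to the top.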

Embeddings can also capture the intent of the user through queries not directly related to the products seen in the session.

Continuing the previous example, when a customer searches for pants after browsing running shoes, the difference between the vanilla approach (showing various pant styles) and the personalized one (displaying running-related pants) is striking.

This highlights the search engine’s ability to capture the “running” theme.

Personalized search is a key part of the customer journey and of commerce deployments that must work reliably at scale. It requires two sources of data:

- Catalog data: begin by gathering your data and integrating your products into a commerce catalog.
- Commerce events: log commerce events to get an exact account of each user’s interactions with the elements of your ecommerce site, so that this behavioral data is tied to the products involved.

Embeddings and vectors are utilized in the following Coveo ML models: Predictive Query Suggestions (PQS), Intent-Aware Product Ranking (IAPR), and Session-Based Product Recommendations (SBPR).

About the Cold Start model

With traditional algorithms, product vectors are generated based on customer interactions with the different products in your commerce catalog. This means that the more interactions a product has, the more accurate its vector representation will be.

But what if a product has few or no interactions? The vector generation process integrates a Cold Start model to address this issue. This optimized process uses a product’s metadata to build its vector representation and place it accurately within the vector space. For more information, see About the Cold Start model.
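To illustrate the general idea of placing a product from metadata alone, the sketch below uses a deliberately simple stand-in: it averages the vectors of existing catalog products that share the new product’s metadata attributes. This is not Coveo’s Cold Start model, whose internals the source does not describe; the `CATALOG` data, the attribute names, and `cold_start_vector` are hypothetical.

```python
from typing import Optional

# Hypothetical catalog: vectors learned from interactions, plus metadata.
CATALOG = {
    "running-shoe-a": {"category": "running", "brand": "acme", "vector": [0.9, 0.8, 0.1]},
    "running-shoe-b": {"category": "running", "brand": "zoom", "vector": [0.8, 0.9, 0.2]},
    "dress-shoe-a": {"category": "formal", "brand": "acme", "vector": [0.1, 0.2, 0.9]},
}


def cold_start_vector(
    metadata: dict[str, str], attributes: tuple[str, ...] = ("category",)
) -> Optional[list[float]]:
    """Estimate a vector for a product that has no interactions yet.

    Averages the vectors of catalog products whose metadata matches the new
    product on every attribute in `attributes`; returns None if none match.
    """
    matches = [
        item["vector"]
        for item in CATALOG.values()
        if all(item.get(attr) == metadata.get(attr) for attr in attributes)
    ]
    if not matches:
        return None
    dims = len(matches[0])
    return [sum(vec[d] for vec in matches) / len(matches) for d in range(dims)]
```

A new running product with no interactions, `cold_start_vector({"category": "running", "brand": "newco"})`, lands between the two existing running shoes, while a product whose category matches nothing returns `None`. Matching on more attributes narrows the neighborhood at the cost of finding fewer matches.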