Troubleshoot Placement health

In this article, you’ll learn how to troubleshoot issues detected with your Placements and find practical steps to resolve them.

The importance of Placement health

The health of your Placements directly impacts the ability of campaigns to reach your customers. Ensuring your Placements are in good health is therefore vital to delivering a return on your personalization efforts.

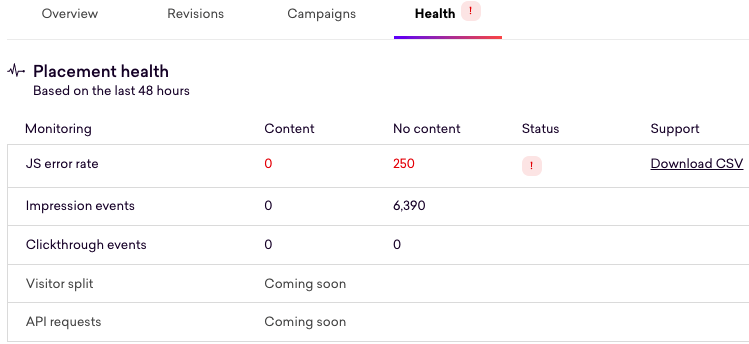

To help you self-manage health, you can use the new "Health" tab, which you’ll find when viewing a Placement, as shown in the following example:

JavaScript errors

The higher the number of JavaScript errors, the less likely a campaign is rendering correctly on your site. In addition, a high number of JavaScript errors may mean that the Coveo Experience Hub doesn’t correctly receive and report on impression and clickthrough events. If the Coveo Experience Hub isn’t receiving all the events your Placement should be collecting, you’ll under-report the success of a campaign.

In the example above, you can see an error state raised against the Placement, with an error rate of 250 per 1000 impressions.

The Experience Hub reports on the overall state of a Placement and provides a visual cue, in the form of a toast notification, when the number of JavaScript errors has surpassed a certain threshold over the last 48 hours.

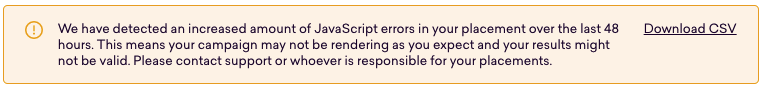

When errors per 1000 impressions are above five and below 50, a caution state will be raised:

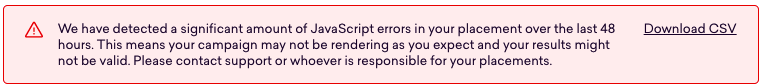

When errors per 1000 impressions are above 50, an error state is raised:

When errors per 1000 impressions are below five, the Placement is considered healthy.

JavaScript error rate

The Experience Hub calculates the JavaScript error rate by dividing the number of JavaScript errors by the number of Placement impressions and multiplying the result by 1000. This can also be interpreted as "the number of errors detected per 1000 impressions."

Note

This rate can exceed 1000 when more errors are detected than reported Placement impressions. This indicates an error in the Placement code that occurs before the impression can be reported.

This metric is calculated based on data from the last 48 hours or since the latest Placement revision was launched, whichever period is shorter.
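The calculation and thresholds described above can be sketched as follows. This is an illustrative sketch, not the Experience Hub's actual implementation: the function names are hypothetical, and the handling of a rate of exactly 5 or 50 and of zero impressions is an assumption, since the article doesn't specify those cases.

```javascript
// Errors per 1000 impressions: (errors / impressions) * 1000.
function errorRatePer1000(errorCount, impressionCount) {
  if (impressionCount === 0) {
    // Assumption: the rate is treated as undefined when no impressions exist.
    return null;
  }
  return (errorCount / impressionCount) * 1000;
}

// Maps a rate to the health states described in this article.
function healthState(rate) {
  if (rate === null) return 'unknown';
  if (rate < 5) return 'healthy';
  // Assumption: rates of exactly 5 or exactly 50 are treated as caution.
  if (rate <= 50) return 'caution';
  return 'error';
}

// The example from this article: 250 errors per 1000 impressions.
console.log(healthState(errorRatePer1000(250, 1000))); // error

// More errors than impressions gives a rate above 1000.
console.log(errorRatePer1000(1500, 1000)); // 1500
```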

Troubleshooting JavaScript errors

To eliminate these errors, we recommend that you adhere to the following process:

- Download the CSV file from the toast notification or the health table
- Analyze the "message" field to see which issues are occurring most often
- If the "message" field doesn’t identify the error, the "hasContent" field and several "sample" fields may help narrow down the conditions needed to reproduce it.

The error CSV has the following schema:

| Field | Definition |
|---|---|
| date | The date of the errors |
| placementId | The Placement’s unique identifier |
| campaignId | The campaign’s unique identifier, when available |
| implementationId | The Placement implementation’s unique identifier |
| hasContent | Whether the error was emitted when content was returned by the Content API |
| origin | Origin of the error |
| sampleUrls | A sample of up to 5 URLs where the errors are emitted |
| sampleDevices | A sample of up to 5 named devices emitting the errors |
| sampleOs | A sample of up to 5 operating systems emitting the errors |
| sampleApp | A sample of up to 5 browsers or applications emitting the errors |
| message | The error message, as seen in the JavaScript console |
| count | The number of errors |

The same issue will typically be reported differently by different browsers or devices.
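As a rough illustration of the "analyze the message field" step, the sketch below totals the "count" field per "message" and lists the most frequent messages first. It assumes the CSV has already been parsed into objects keyed by the schema's field names. The `topMessages` helper and the sample rows are hypothetical and made up for illustration.

```javascript
// Sums the "count" field per "message" and returns the most frequent first.
function topMessages(rows, limit = 5) {
  const totals = new Map();
  for (const row of rows) {
    const previous = totals.get(row.message) || 0;
    // CSV values are usually strings, so convert the count to a number.
    totals.set(row.message, previous + Number(row.count));
  }
  return [...totals.entries()]
    .map(([message, count]) => ({ message, count }))
    .sort((a, b) => b.count - a.count)
    .slice(0, limit);
}

// Made-up rows for illustration only.
const sampleRows = [
  { message: "Cannot read properties of undefined (reading 'price')", count: '40', hasContent: 'true' },
  { message: 'undefined is not an object', count: '25', hasContent: 'true' },
  { message: "Cannot read properties of undefined (reading 'price')", count: '10', hasContent: 'false' },
];

console.log(topMessages(sampleRows));
```

Because the same issue can be worded differently by different browsers, exact-match grouping like this may split one underlying problem across several messages.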

Previous revisions

Errors from previous revisions aren’t currently made available in the UI.

If necessary, you can query errors from previous revisions of a Placement in BigQuery in the event_qubit_placementError table.

Missing impression events

Impression events are used to report the number of visitors to your site that were eligible to see the content rendered by your Placement and are vital to measuring the impact of a campaign.

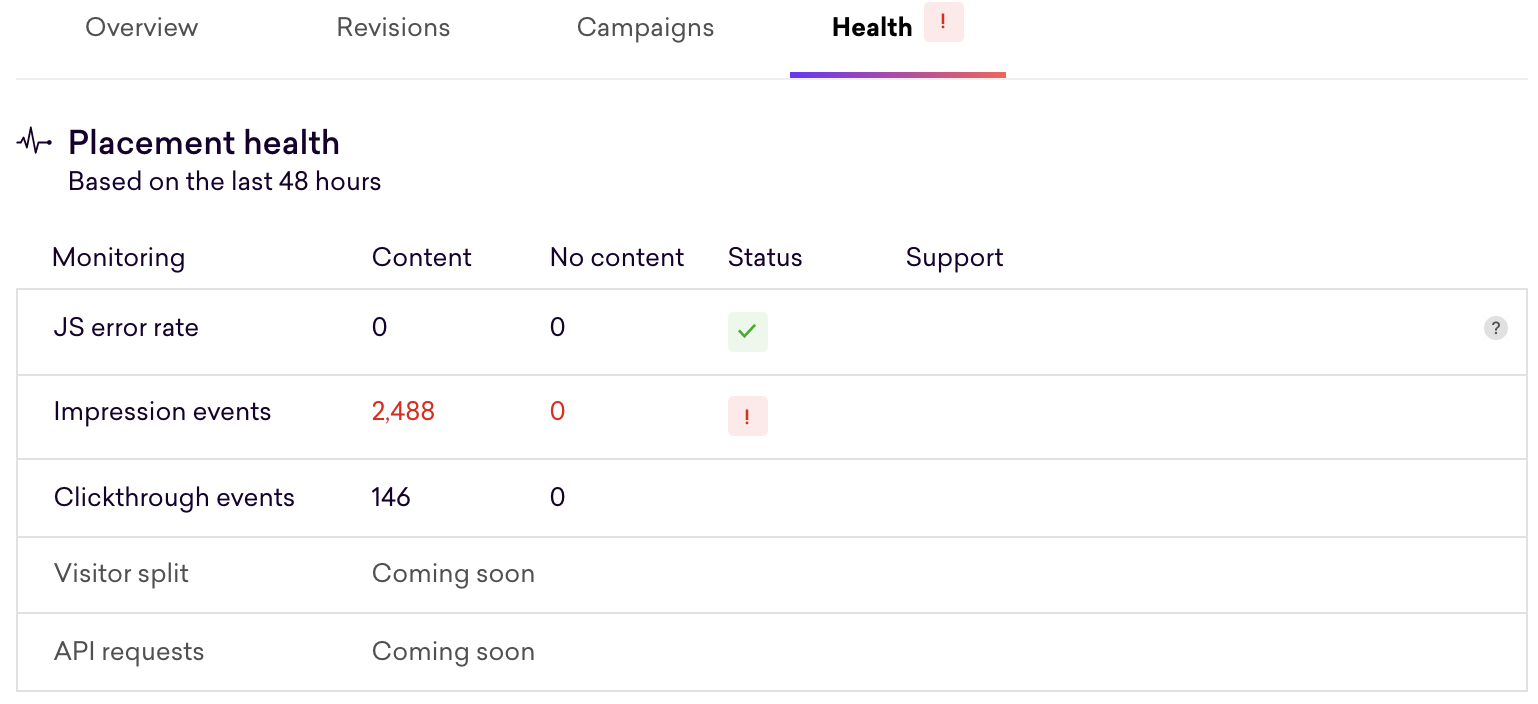

In the "Health" tab, you can see the report containing the number of impression events emitted, split by "Content" and "No content." When the impression event count is 0 and expected to be higher (see below), a toast notification will be added to highlight the issue:

and show an error state:

In the example above, no JS errors were detected, so the Experience Hub reports the health as good for this data point. However, where no impression events are detected because there’s no content, a flag is raised with regard to the health.

Our expectations around the emission of impression events change depending on how the Placement is used. Refer to the following use cases:

- If no active campaigns are using the Placement, the report will only include missing impressions when the Content API doesn’t return any content.
- If an active campaign is using the Placement and the traffic allocation is weighted in favor of the control, for example, a custom traffic split with less than 50% visibility, the report will include missing impressions both when the Content API returns content and when it doesn’t.
- If an active campaign is using the Placement and has a 100% traffic allocation or is using the replace control feature, the report will only include missing impressions when the Content API returns content.

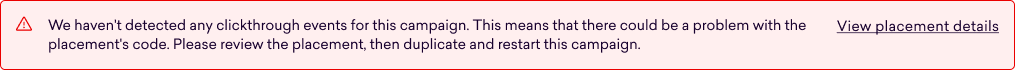

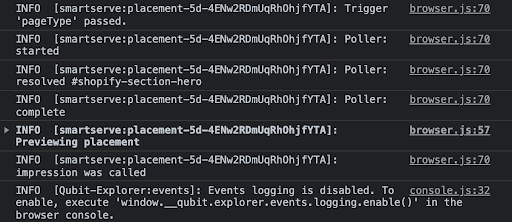

Missing clickthrough events

In the "Health" tab, you can see the number of clickthrough events emitted, split into "Content" and "No content." When the clickthrough event count is 0 and expected to be higher (see below), a toast notification will be added to highlight the issue:

and show an error state:

In the example above, no JS errors or missing impression events were detected, so the health is reported as good for these two data points. However, where no clickthrough events are detected because there’s no content, a flag is raised with regard to the health.

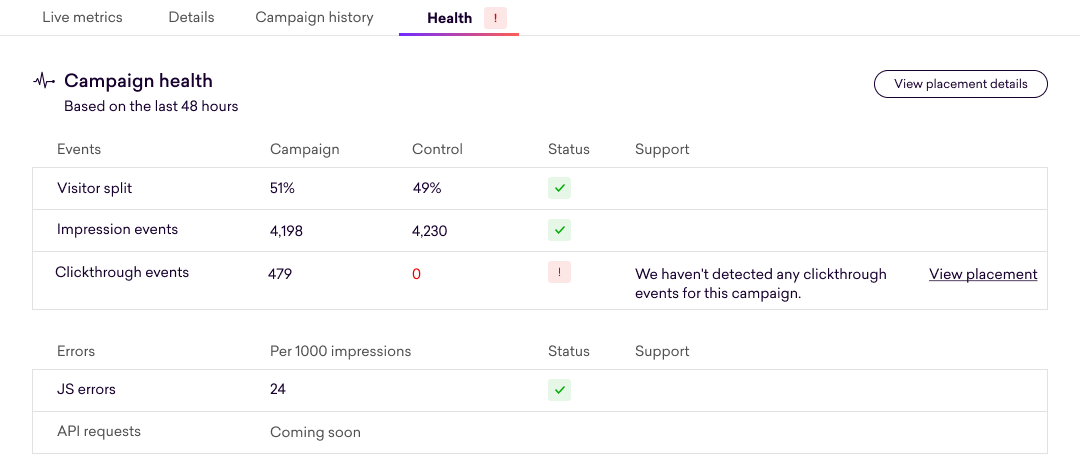

Configuring clickthrough health tracking

Not all Placements are clickable, so you must configure each Placement to track clickthrough events. A toast notification will prompt you to set this configuration if it’s missing.

In particular, the developer implementing the Placement must specify whether the Placement is expected to fire clickthrough events. Two scenarios need to be accounted for:

- When content is given to the Placement
- When no content is given to the Placement (that is, the visitor is a member of a non-targeted audience or the control group)

You can configure each Placement to track clickthrough events in the "Health tracking" section under Settings:

The default configuration for each Placement type is as follows:

- Product recommendations Placements are automatically enabled
  - The Placement should expect clicks by default (typically, when the user interacts with the carousel)
- Product badging Placements are automatically disabled
  - The Placement should expect no clicks by default (these Placements are typically not clickable). However, if the number of clicks is nonzero, no issue is raised.
- Personalized content Placements aren’t set by default
  - Configuration must be done on a Placement-by-Placement basis

Reporting clickthrough events

Assuming that you’ve configured a Placement to track clickthrough events, and depending on how the Placement is being used, the standard used to measure against will vary:

- If no campaigns are live, the report will only include missing clicks when content isn’t given to the Placement.
- If a campaign is live and has an allocation towards the control, the report will include missing clicks in both cases: when content is given to the Placement and when it isn’t.
- If a campaign is live at 100% allocation or using the replace control feature, the report will only include missing clicks when content is given to the Placement.
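The rules above can be sketched as a small decision function. This is a minimal sketch under stated assumptions, not the Experience Hub's actual logic: the function and all parameter names are hypothetical, and `hasControl` is assumed to be false for a campaign at 100% allocation or using the replace control feature.

```javascript
// Returns the cases ("content", "no content") in which clicks are missing.
function missingClickIssues({
  campaignLive,          // whether a campaign is live on the Placement
  hasControl,            // whether the live campaign allocates traffic to a control
  expectWithContent,     // health tracking setting: clicks expected with content
  expectWithoutContent,  // health tracking setting: clicks expected without content
  contentClicks,
  noContentClicks
}) {
  // With a live campaign, the "content" case is always evaluated.
  const checkContent = campaignLive;
  // The "no content" case is evaluated with no campaign, or with a control.
  const checkNoContent = !campaignLive || hasControl;

  const issues = [];
  if (checkContent && expectWithContent && contentClicks === 0) {
    issues.push('content');
  }
  if (checkNoContent && expectWithoutContent && noContentClicks === 0) {
    issues.push('no content');
  }
  return issues;
}

// A live campaign with a control, where no clicks arrive without content.
console.log(missingClickIssues({
  campaignLive: true,
  hasControl: true,
  expectWithContent: true,
  expectWithoutContent: true,
  contentClicks: 12,
  noContentClicks: 0
})); // [ 'no content' ]
```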

Troubleshooting missing clicks

- Determine whether your Placement ought to be tracking clickthroughs in the first place:
  - If your Placement isn’t clickable, it may be sufficient to disable health tracking settings in the Placement builder.

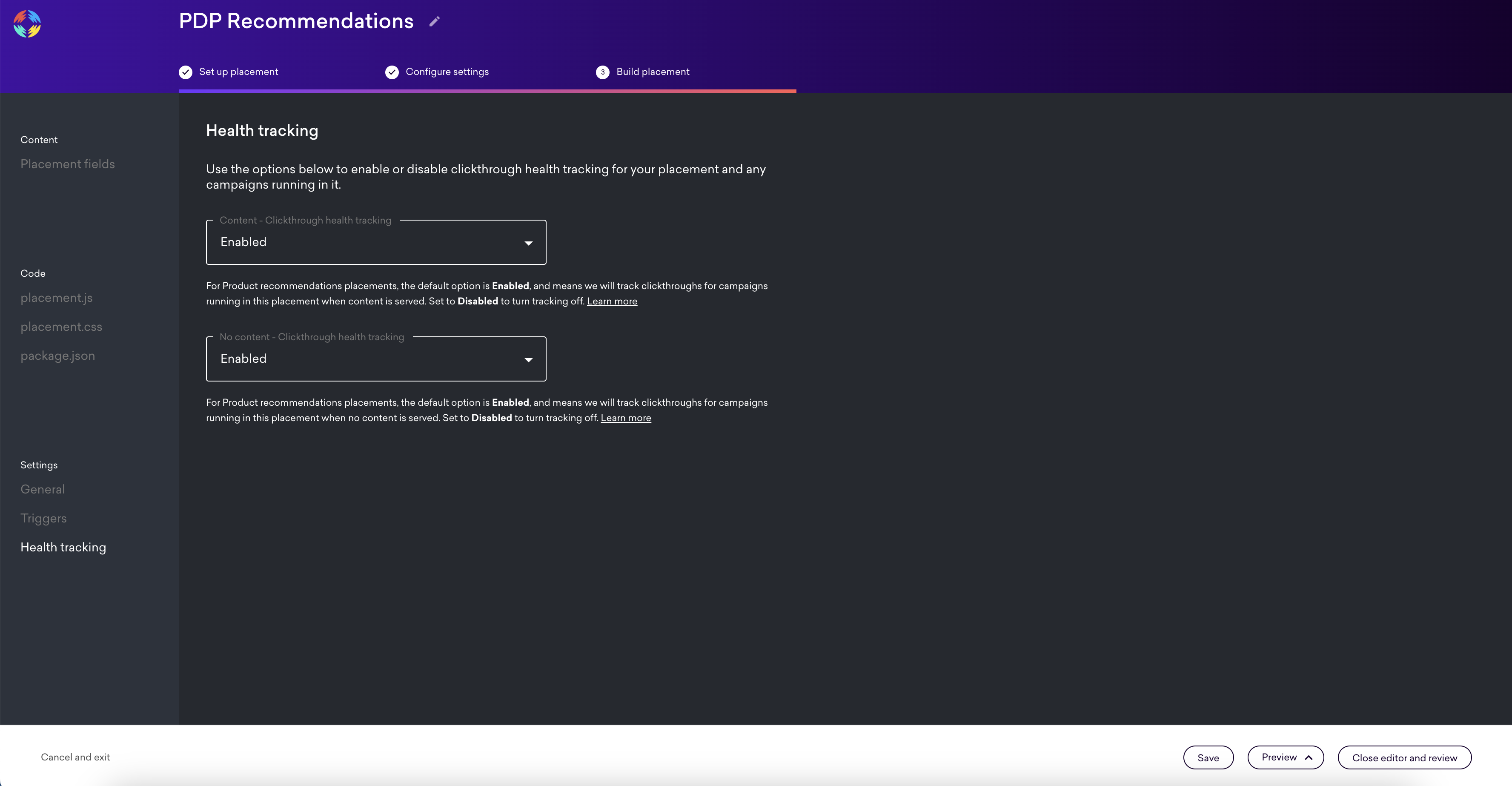

- Preview your Placement with or without content, depending on which events are missing:
  - Open the browser console and check that you see the Placement logs, which should give you helpful information such as page type triggers, poller, and event logs (for example, the screenshot below shows that impression was already called):
  - Click the clickable element of your Placement, and check whether the log for the event appears in your console. If your Placement redirects the click in the same window, either select the 'Preserve log' option in your console or use Ctrl (Windows)/Command (Mac) + click to open the link in a new page:
  - If you don’t see this event in the console, you’ll need to debug the Placement code until you can successfully emit the clickthrough event
- If you’re still facing issues, contact your Customer Success Manager or Coveo Support.

Note

Achieving a healthy score is no guarantee that clicks are being tracked in the right proportions. Unlike impressions, it’s not generally possible to validate clickthrough proportions (because they’re a target metric of an A/B test).

Note

We don’t currently track "clicks greater than impressions," which is also unhealthy (typically indicates an underlying problem with impression tracking).

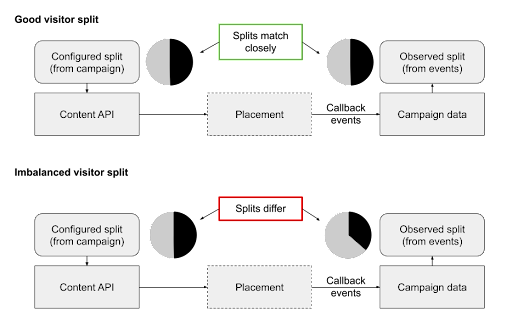

Bad visitor splits

By observing the visitor split from QP events and comparing it to the configured split (traffic split) in the Placement’s campaign, you can report any issues with your Placement when significant differences occur:

Differences in the observed and configured split indicate bias in the Placement’s implementation in favor of "content" or "no content."

When evaluating splits, you’ll use the Placement’s most recent campaign. You can’t evaluate splits when the Placement doesn’t have an active campaign running.

Resolving split imbalances

To resolve a traffic split imbalance, we recommend that the Placement developer do the following:

- Determine whether the split favors the control (no content) or the variant (content):
  - If the split favors the control, the developer should look into cases where impressions would be overreported when the Content API returns no content or where impressions would be underreported when it returns content
  - If the split favors the variant, the developer should look into cases where impressions would be underreported when the Content API returns no content or where impressions would be overreported when it returns content
- Eliminate differences between the "content" and "no content" code paths before calling the impression callback. This task may include refactoring the Placement code to emit events in portions of the code path shared between "content" and "no content" (typically the beginning of the Placement).

See Best practices for avoiding bias for more information on removing bias.

To assess the significance of the difference between the observed and configured allocation, the Experience Hub uses a chi-squared test at 99.9% confidence. This test closely approximates an exact multinomial test (with a preference for false negatives over false positives relative to an exact test).

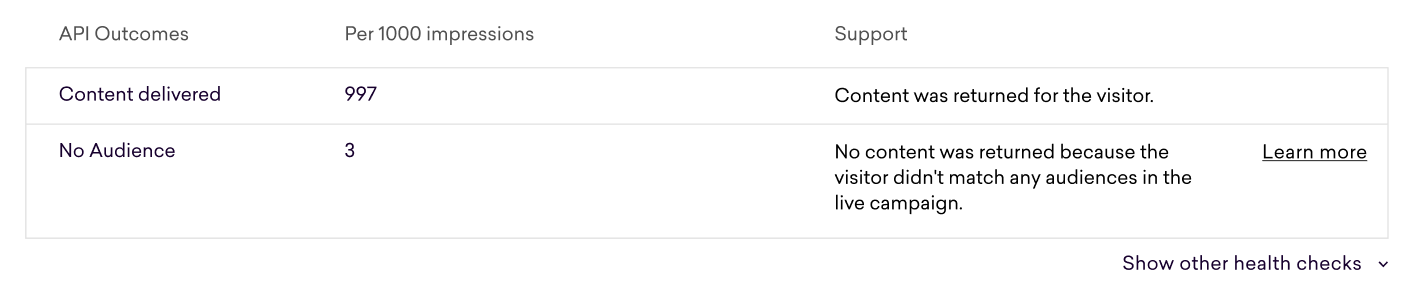

API Outcomes

The API Outcomes table describes the results of the Placement’s impressions over the last 48 hours. The frequency of each outcome is listed as a rate per 1000 impressions. You can use this data to understand how requests to the Content API are being interpreted, helping you monitor or debug a Placement.

The set of possible API outcomes is listed and explained below.

The codes listed can be seen when making callback requests with the debug parameter.

In the case where the outcome is unwanted, you can refer to the Placement and campaign remediation steps that the Experience Hub proposes.

Note

Outcome data is only available for successful callback requests. Any failed requests won’t contribute to the rates shown.

| API outcome | Meaning | Placement remediation | Campaign remediation |
|---|---|---|---|
| Content delivered (OK) | Successfully returned content | N/A | N/A |
| No campaign (NO_CAMPAIGN) | No campaign live on the Placement | Launch a campaign | N/A |
| No audience (NO_AUDIENCE) | Request didn’t match any audiences in the campaign | This is a normal outcome since many campaigns are designed to only target visitors in a specific audience. This outcome will occur for any visitor that doesn’t fall into a targeted audience. If the outcome’s occurrence is unexpectedly high, target the campaign to a broader audience. For example, add a fallback experience personalizing the Placement for ‘All visitors’. | This is a normal outcome since many campaigns are designed to only target visitors in a specific audience. This outcome will occur for any visitor that doesn’t fall into a targeted audience. If the outcome’s occurrence is unexpectedly high, target the campaign to a broader audience. For example, add a fallback experience personalizing the Placement for ‘All visitors’. |
| No seed (NO_SEED) | Request matched a campaign with a seeded strategy or product badges, but no product was provided | For injected Placements, ensure | Pick an unseeded strategy or review your Placement. |
| Too many seeds (TOO_MANY_SEED) | Request matched a multi-badging campaign, but too many products were provided | Ensure your page isn’t producing more than 100 | Change your product source to one with fewer products, or review your Placement |
| Product not in catalog (PRODUCT_NOT_IN_CATALOG) | Product Placement uses a product that wasn’t found in the catalog | Update the active campaign to ensure the product ID is present in the catalog. Alternatively, select a different product. | Update the campaign to ensure the product ID is present in the catalog. Alternatively, select a different product. |
| Product out of stock (PRODUCT_OUT_OF_STOCK) | Product Placement uses a product that’s out of stock | Update the active campaign to select a different product that’s in stock. | Update the campaign to select a different product that’s in stock. |
| No badge (NO_BADGE) | Request didn’t match any badging rules | This is a normal outcome since many badging strategies, such as Social Proof, don’t apply to all products. If the outcome’s occurrence is unexpectedly high, update the active campaign’s badging rules to match more product requests. If these steps don’t resolve the issue, consider these options: For injected Placements, ensure | This is a normal outcome since many badging strategies, such as Social Proof, don’t apply to all products. If the outcome’s occurrence is unexpectedly high, change your badging rules to match more product requests or review your Placement. |
| No recommendations (NO_RECS) | Internal request to Recs API didn’t return any recommendations | Ensure your product catalog is healthy. Alternatively, try using a different strategy. | Pick a different recommendations strategy. |
| Too few recommendations (TOO_FEW_RECS) | Internal request to Recs API didn’t return enough recommendations to meet the Placement’s minimum threshold | Ensure the recommendation rules aren’t too restrictive. Alternatively, ensure your product catalog is healthy or try using a different strategy. | Remove restrictive recommendations rules or pick a different recommendations strategy |
| Bad locale (BAD_LOCALE) | Request’s | For injected Placements, ensure For API Placements, ensure | Change the campaign locale to one matching the |
| Bad strategy (BAD_STRATEGY) | The campaign strategy is unsupported or unknown. | Adjust the campaign to use a different strategy. | Adjust the campaign to use a different strategy. |
| No view type (NO_VIEWTYPE) | View attribute is missing | For API Placements, ensure that the | Review your Placement. |
| No content (NO_CONTENT) | Experience Hub was unable to find or process the Placement’s JSON schema. | Check the Placement’s schema and ensure it’s using valid JSON. | Review your Placement. |
| Content error (CONTENT_ERROR) | One of the services used by Coveo internally returned an error. | Please contact support | Please contact support |
| Internal error (CONTENT_ERROR) | Something broke on our end. | Please contact support | Please contact support |

FAQ

General

How frequently is the data presented in Campaign and Placement Health messages updated?

It’s updated every 5 minutes. Technically, data is queried for each Placement when it’s loaded, then the response is cached for 5 minutes.

How far does the data look back?

Data is based on the last 48 hours. This timeframe was decided on the assumption that data from too far back isn’t needed to analyze current health.

Specifically for Placements, if there has been a new version published less than 48 hours in the past, the Experience Hub will look at that instead. The preference for certain data is indicated in the UI, where it’s stated "Since publishing it today" or "Since publishing it yesterday" instead of the default "Based on the last 48 hours." The only exception to this rule is the "API Outcomes" table, which always reflects the last 48 hours.

In the Placement health table, what’s the difference between "content" and "no content"?

If a visitor was served content in the session when the clickthrough, impression, or error event occurred, the Experience Hub reports it as a "Content" event. Conversely, if the visitor wasn’t served content, it’s reported as a "No content" event.

"Content" events are always associated with an active campaign. "No content" events are seen when:

- The Placement has no active campaigns
- Some visitors don’t match any audiences in the campaign
- There’s a campaign running with a traffic allocation of less than 100% for all audiences

Is Campaign and Placement Health available in the Experimentation Hub?

Experience health has been a feature in the Experimentation Hub for a while.

placementError events emitted by experiences will appear in the Experience Hub.

JS errors

What does it mean when the JS error rate per 1000 impressions is over 1000?

In this case, the Placement is emitting more errors than impressions. This issue can be caused by erroneous code that occurs before the Placement impression callback event is fired. For example:

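```javascript
module.exports = function renderPlacement ({
  content,
  elements,
  onImpression
}) {
  // The error is thrown before the impression callback is reached,
  // so an error is reported without a matching impression.
  throw new Error('Immediate error')

  onImpression()
  // ...
}
```

Can I look at errors for previous revisions?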

No. The purpose of the errors CSV is resolution, not maintaining history. If necessary, errors from previous Placement revisions can be queried in BigQuery.

Is error data stored in BigQuery?

Yes, the errors table is called event_qubit_placementError and lives in the "qubit-vcloud-eu-prod" project.

The hourly counts are stored in materialized views called agg_placement_clickthroughs, agg_placement_errors, and agg_placement_impressions in the "qubit-vcloud-eu-proc-prod" project.

Impression events

How’s the health of the impression events calculated?

The evaluation of the health state is based on the Placement’s current usage. The checks are based on:

- Whether the Experience Hub is receiving "content" or "no content" impressions
- Whether there’s an active campaign using the Placement
- Whether the active campaign using the Placement has a control
- Whether the active campaign using the Placement has the replace control feature enabled, that is, whether it’s a single- or multi-variant campaign

Refer to the following table for further details for how the Experience Hub reports the health status based on impression events:

| Campaign state | No content | Content | Health status |
|---|---|---|---|
| No campaign | impressions >0 | n/a | Good |
| No campaign | 0 | n/a | Bad |
| Single-variant campaign | impressions >0 | impressions >0 | Good |
| Single-variant campaign | 0 | impressions >0 | Poor |
| Single-variant campaign | impressions >0 | 0 | Poor |
| Single-variant campaign | 0 | 0 | Poor |
| Multi-variant campaign | n/a | impressions >0 | Good |
| Multi-variant campaign | n/a | 0 | Poor |
| 100% Campaign | n/a | impressions >0 | Good |
| 100% Campaign | n/a | 0 | Poor |
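The table above can be read as a simple lookup. The sketch below encodes it as a function. The function name and the campaign state labels ('none', 'single-variant', 'multi-variant', 'full') are hypothetical and chosen for illustration; they aren't identifiers used by the Experience Hub.

```javascript
// Returns the health status from the table for the given impression counts.
function impressionHealth(campaignState, noContentImpressions, contentImpressions) {
  switch (campaignState) {
    case 'none':
      // Only "no content" impressions are expected without a campaign.
      return noContentImpressions > 0 ? 'Good' : 'Bad';
    case 'single-variant':
      // Both the control and the variant should report impressions.
      return noContentImpressions > 0 && contentImpressions > 0 ? 'Good' : 'Poor';
    case 'multi-variant':
    case 'full':
      // Only "content" impressions are evaluated.
      return contentImpressions > 0 ? 'Good' : 'Poor';
    default:
      throw new Error(`Unknown campaign state: ${campaignState}`);
  }
}

console.log(impressionHealth('single-variant', 0, 340)); // Poor
console.log(impressionHealth('full', 0, 340)); // Good
```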

Bad splits

How does the Experience Hub determine if a Placement has an imbalance in traffic (bad split)?

The Experience Hub uses a statistical test called a chi-squared test. The test takes as inputs the number of unique visitors in a campaign’s control and variant and the campaign’s intended split, and outputs a value between 0 and infinity. The higher the value, the stronger the evidence that the Placement’s logic leads to visitor bias. The Experience Hub declares a bad split when the value is over 10.8, which corresponds to a confidence level of 99.9% that there’s bias.
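As a rough illustration, the statistic can be computed with the standard Pearson chi-squared formula for two categories (control and variant). This is a sketch under that assumption. The article doesn't give the exact formula the Experience Hub uses, and the function names are hypothetical.

```javascript
// Pearson chi-squared statistic: sum of (observed - expected)^2 / expected.
// configuredVariantShare is the intended variant allocation, between 0 and 1.
function chiSquaredSplit(controlVisitors, variantVisitors, configuredVariantShare) {
  if (configuredVariantShare <= 0 || configuredVariantShare >= 1) {
    throw new Error('configuredVariantShare must be strictly between 0 and 1');
  }
  const total = controlVisitors + variantVisitors;
  const expectedVariant = total * configuredVariantShare;
  const expectedControl = total - expectedVariant;
  return (
    (variantVisitors - expectedVariant) ** 2 / expectedVariant +
    (controlVisitors - expectedControl) ** 2 / expectedControl
  );
}

// Threshold quoted in this article.
const BAD_SPLIT_THRESHOLD = 10.8;

function isBadSplit(controlVisitors, variantVisitors, configuredVariantShare) {
  return chiSquaredSplit(controlVisitors, variantVisitors, configuredVariantShare) > BAD_SPLIT_THRESHOLD;
}

// A configured 50/50 split observed as 4800 control and 5200 variant visitors.
console.log(chiSquaredSplit(4800, 5200, 0.5)); // 16
console.log(isBadSplit(4800, 5200, 0.5)); // true

// A smaller deviation stays under the threshold.
console.log(isBadSplit(4900, 5100, 0.5)); // false
```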

What causes bad splits?

Bad splits are caused when the conditions under which the impression callback (onImpression() in placement.js) is called differ between the control and variant.

We show examples of code that could induce bias.

To remove bias, the best practice is to keep Placement code "common" between the content and no content cases until the impression callback is called.
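The difference between a biased and a shared code path might look like the following sketch. It is hypothetical: it assumes the impression callback is passed to the Placement function as `onImpression` and that `elements` is an array of target elements. Neither detail is confirmed by this article, and the function names are made up for illustration.

```javascript
// Biased: a content-only check runs before the impression is reported,
// so some "content" visitors never emit an impression.
function biasedPlacement({ content, elements, onImpression }) {
  if (content) {
    const target = elements[0];
    if (!target) return; // only the content path can exit early
    onImpression();
    // ...render the content into the target...
  } else {
    onImpression();
  }
}

// Shared path: the same checks run for both cases before the impression
// callback, and the paths only diverge afterwards.
function sharedPathPlacement({ content, elements, onImpression }) {
  const target = elements[0];
  if (!target) return; // applies to "content" and "no content" alike
  onImpression();
  if (content) {
    // ...render the content into the target...
  }
}
```

In the biased version, a missing target element suppresses impressions only for visitors who received content, which would skew the observed split towards the control.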

Clickthrough events

How to know if a Placement should be getting clickthroughs?

That will need to be decided by the developer or business user and will depend on a few factors, such as Placement type and whether there’s a link to click. It can also vary between when the Placement displays content and when the content is empty.

Do you need to republish Placements after making changes to clickthrough health settings?

No, you don’t. The clickthrough health settings take effect immediately. The clickthrough health status of the Placement depends on the clickthrough event counts, the type of the campaign running in the Placement, and the settings.

What happens when the clickthrough health settings are different for content/no-content?

The health checks are performed separately for the number of events emitted when the Placement was displaying content and when the Placement was shown without content.

For example, imagine a Placement adding a clickable link/button to the page only when content is displayed (when a campaign is live) but not changing the page when there’s no content (when no campaign is live). For this Placement, you would enable clickthrough health tracking when there’s content and disable it when there’s no content because no clicks are expected when the Placement is empty.

How to configure a Personalized content Placement with optional URL fields when only some campaigns generate clickthroughs?

Currently, the Experience Hub provides clickthrough health settings on the Placement level only. For this reason, you should set those health settings to disabled for a Placement with optional URL fields. Clickthrough health won’t be tracked, but this avoids false-positive warnings for campaigns not using the optional URL.

Why are the clickthrough health settings defaults different for each type of Placement?

Certain types of Placements are clickable or not clickable more often than not. For example, badging Placements are usually non-clickable, and recommendation Placements are clickable. The defaults reflect that pattern, but the settings can be changed for every Placement.

Can you leave your settings as "Not set"?

That’s not recommended and may cause critical warnings in future versions of the Experience Hub. Pre-existing Placements can’t be automatically assigned those health tracking settings, and for this reason, we ask you to specify the settings manually.