Optimize Snowflake reader account credits

Optimize Snowflake reader account credits

When working with a Snowflake reader account, understanding how to efficiently query data can significantly impact your credit usage.

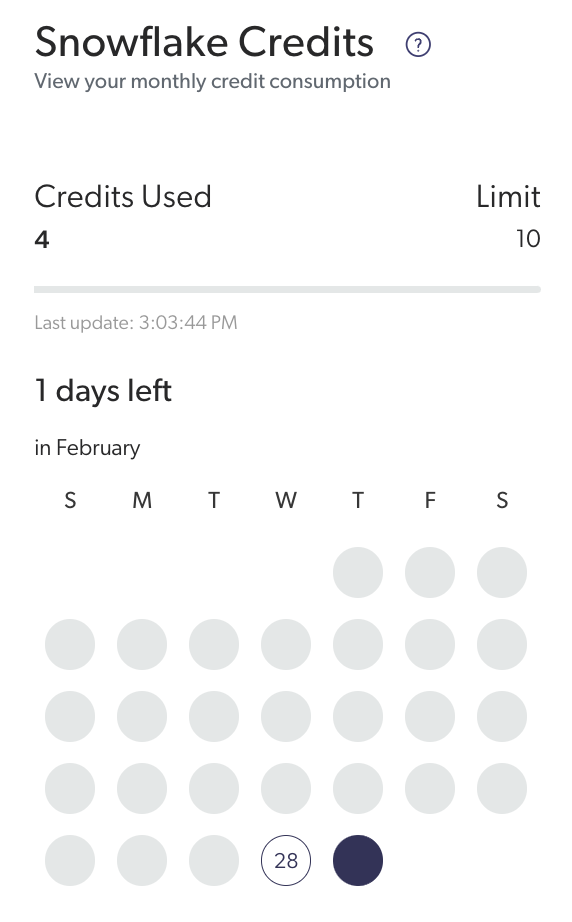

Reader account users are typically allotted ten Snowflake credits a month. Snowflake credits are the unit of measure representing the amount of data processed. The number of credits used is determined by the size of the data and the complexity of the query.

This article provides tips on how to optimize your credit usage and get the most out of your reader account.

Leading practices

-

Snowflake credit usage is impacted by query processing time, and a query’s complexity can greatly vary. It’s therefore important to structure the query accordingly.

A well-optimized query may fetch one million rows in seconds, while a less optimized query may take minutes to fetch a single row. The key to efficiency and reducing credit usage lies in the strategic selection and structuring of your queries.

-

Consider using a query that allows Snowflake to directly stream data to your application. Essentially, you would transfer the data from one place to another without processing it. This approach is more efficient for a few reasons:

-

It reduces the amount of data processed, which in turn reduces the amount of credits used.

ExampleBarca Sports has a reader account with ten Snowflake credits a month. However, they notice that upon running a query to retrieve the sales data for top-selling products in each category over a specific period, along with the average sale amount per product, that they’re using five credits in one day which means that they will reach their limit before the end of the month.

As a result, they revise their queries to focus on direct data streaming. Instead of processing data within Snowflake, they write queries that allow Snowflake to stream data directly to their analytics application. This approach reduces the computational load on Snowflake and the amount of credits used.

-

If there was an error in the data before streaming it, you would be able to fix it before it’s transferred to your application. If you’re not streaming raw data and instead processing it in Snowflake, you would have to fix the error and re-run the query, which would use more credits.

-

-

Data in Snowflake is stored in micro-partitions. These micro-partitions are based on date and time, therefore it’s a good practice to use the date and time to filter the data. For example:

SELECT* FROM TABLE_NAME WHERE DATE_TIME BETWEEN 'YEAR-MONTH-DAY' AND 'YEAR-MONTH-DAY';You can use this query to filter the data, potentially encompassing up to 100 million rows. Depending on your database, the amount of rows may vary between days, months, or years, therefore we recommend calculating the amount of data you’re working with before running the query.

-

Avoid filtering queries solely by columns (other than

DATE_TIME). For example, filtering bysearchHubonly would likely use more credits and also slow the transfer down.Selective column filtering is a good way to reduce the amount of data processed and conserve credits. When you filter by

DATE_TIME, you’re only processing the data that you need (in other words, only the required micro-partitions), which reduces the amount of credits used.However, when you use a column that has a high cardinality, such as

searchHub, you’re processing a large amount of data since Snowflake processes all the micro-partitions on each query, which will use more credits. -

Avoid operators that reorganize the data, such as

ORDER BYandGROUP BY. You can use these operators to organize the data in your application, which will save you credits. -

Avoid using the

LIMIToperator on its own. While this operator would technically reduce the volume of data processed, you would run the risk of duplicate rows depending on the number of rows included in the date range.Using

LIMITby itself can lead to unpredictable outcomes, as the data returned may vary with each execution without a specific order. Furthermore, pairingLIMITwithORDER BYmay provide ordered results but there may be a performance impact if the ordering column isn’t indexed or if the query is complex. -

Before running a query that includes a larger amount of rows, test your network speed as well as the capacity of the application you’re using in the data transfer process.

The speed of your network can impact the amount of time it takes to transfer the data, which in turn impacts the amount of credits used. A slower network could result in timeouts, which would require you to re-run the query. Meanwhile, the capacity of the application you’re using to transfer the data could also impact the amount of time it takes to transfer the data.

Therefore, performing test runs before running the query can help you gauge the how your overall data transfer infrastructure will impact your credit usage.

-

Avoid creating complex keys. Most tables have a primary key that ensures that each row is identifiable. However, if you need a unique primary key, a recommended guideline is to create a single column key instead that automatically assigns a unique identifier to each row.

-

Review your queries. Use the Query History page in Snowsight to review your queries and identify if any of them are using a large amount of credits. This page offers insight into query performance and execution patterns, which can help you identify areas for improvement. For more information, see Monitor query activity with Query History documentation.